Attention Is All You Need

arxiv.org

0. Abstract

The dominant sequence transduction models are based on complex recurrent or convolutional neural networks that include an encoder and a decoder.

The best performing models also connect the encoder and decoder through an attention mechanism.

The authors propose a new architecture, the Transformer, based solely on attention mechanisms.

They show that these models are more parallelizable, generalize well to other tasks, and require significantly less time to train.

1. Introduction

An RNN's inherently sequential computation precludes parallelization within training examples.

- This becomes critical at longer sequence lengths, as memory constraints limit batching across examples.

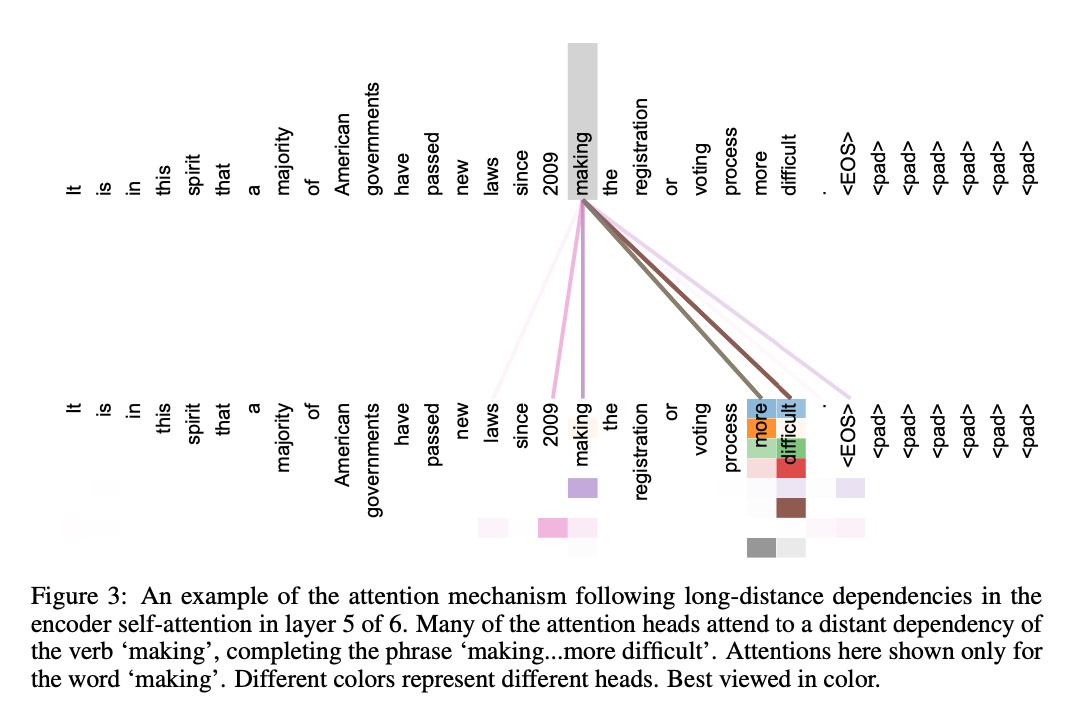

Attention allowed modeling of dependencies without regard to their distance in the input or output sequences

- However, in most cases attention mechanisms were used in conjunction with a recurrent network.

The Transformer is a model architecture that eschews recurrence and instead relies entirely on an attention mechanism to draw global dependencies between input and output.

2. Background

Attempts to reduce the RNN's sequential computation: ConvS2S, ByteNet

- In these models, the number of operations required to relate signals from two arbitrary input or output positions grows with the distance between positions (linearly for ConvS2S, logarithmically for ByteNet).

In the Transformer, this is reduced to a constant number of operations.

3. Model Architecture

The Illustrated Transformer

jalammar.github.io

The Transformer follows an encoder-decoder structure and is an auto-regressive model (at each step it consumes the previously generated symbols as additional input when generating the next one).

3.1 Encoder-Decoder

3.1.1 Encoder

z_1 = MultiHeadSelfAttention(x), where x is the layer input

z_2 = LayerNorm(x + z_1)

- "+": residual connection

z_3 = LayerNorm(z_2 + FFN(z_2)), where FFN is the position-wise feed-forward network
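The three steps above can be sketched in NumPy as follows. This is only an illustration: `layer_norm` omits the learned gain and bias, and the two sub-layers are passed in as toy stand-in functions (hypothetical; the real ones are multi-head self-attention and the feed-forward network with learned weights).

```python
import numpy as np

def layer_norm(x, eps=1e-6):
    """Normalize each position (last axis) to zero mean and unit variance.
    Simplification: no learned gain/bias parameters."""
    mean = x.mean(axis=-1, keepdims=True)
    std = x.std(axis=-1, keepdims=True)
    return (x - mean) / (std + eps)

def encoder_layer(x, self_attention, feed_forward):
    """Apply both sub-layers, each wrapped as LayerNorm(input + Sublayer(input))."""
    z_1 = self_attention(x)
    z_2 = layer_norm(x + z_1)                  # residual connection, then normalize
    z_3 = layer_norm(z_2 + feed_forward(z_2))  # same wrapping for the second sub-layer
    return z_3

# Toy stand-ins for the sub-layers (hypothetical, for shape checking only).
rng = np.random.default_rng(0)
x = rng.normal(size=(5, 8))                    # 5 positions, d_model = 8
W = rng.normal(size=(8, 8))
out = encoder_layer(x, lambda t: t @ W, lambda t: np.maximum(0.0, t @ W))
print(out.shape)
```

Because every sub-layer output is added back to its input, all sub-layers must produce outputs of the same width d_model as the input.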

3.1.2 Decoder

The keys and values computed from the encoder stack's final output are fed into the encoder-decoder attention of every decoder block.

Masked Multi-head attention prevents positions from attending to subsequent positions (position i can depend only on the known outputs at positions less than i)
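A minimal sketch of the masking idea, assuming NumPy and hypothetical helper names `causal_mask` and `masked_softmax`. The mask is lower triangular including the diagonal: since the decoder inputs are shifted right by one position, the input at position i is the output generated at position i-1, so letting position i attend to inputs up to and including i still only exposes outputs at positions less than i.

```python
import numpy as np

def causal_mask(n):
    """Boolean (n, n) matrix; entry [i, j] is True when query i may attend to key j."""
    return np.tril(np.ones((n, n), dtype=bool))

def masked_softmax(scores, mask):
    """Softmax over the last axis after setting disallowed scores to -inf."""
    scores = np.where(mask, scores, -np.inf)
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)                               # exp(-inf) == 0
    return weights / weights.sum(axis=-1, keepdims=True)

scores = np.zeros((4, 4))            # equal scores, so allowed positions share weight
weights = masked_softmax(scores, causal_mask(4))
print(weights)
```

Every entry above the diagonal comes out as exactly zero, so no information flows from later positions to earlier ones.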

3.2 Attention

3.2.1 Scaled Dot-Product Attention

The input consists of queries and keys of dimension d_k, and values of dimension d_v.

Compute the dot products (the compatibility function) of the query with all keys.

Divide each by √d_k (that is, multiply by the scaling factor d_k^(-1/2)), apply a softmax to obtain the weights on the values, and take the weighted sum of the values: Attention(Q, K, V) = softmax(QK^T / √d_k) V.

* For large values of d_k, the dot products grow large in magnitude, pushing the softmax function into regions where it has extremely small gradients. To counteract this effect, the dot products are scaled by 1/√d_k.

* Multiplying the input vector by the weight matrices W_q, W_k, W_v produces q, k, v.
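The computation described above can be sketched in NumPy as below. The function and variable names are illustrative, and the projection matrices are random placeholders for weights that would be learned in practice.

```python
import numpy as np

def softmax(x):
    """Row-wise softmax over the last axis."""
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)

def scaled_dot_product_attention(Q, K, V):
    """Q: (n_q, d_k), K: (n_k, d_k), V: (n_k, d_v) -> (output (n_q, d_v), weights (n_q, n_k))."""
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)   # compatibility of every query with every key
    weights = softmax(scores)         # each row sums to 1
    return weights @ V, weights       # weighted sum of the values

rng = np.random.default_rng(0)
X = rng.normal(size=(3, 4))                                 # 3 tokens, input width 4
W_q, W_k, W_v = (rng.normal(size=(4, 2)) for _ in range(3)) # placeholder weights
out, weights = scaled_dot_product_attention(X @ W_q, X @ W_k, X @ W_v)
print(out.shape, weights.sum(axis=-1))
```

A quick sanity check on the design: if all keys are identical, every score in a row is equal, the weights become uniform, and the output is simply the mean of the values.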

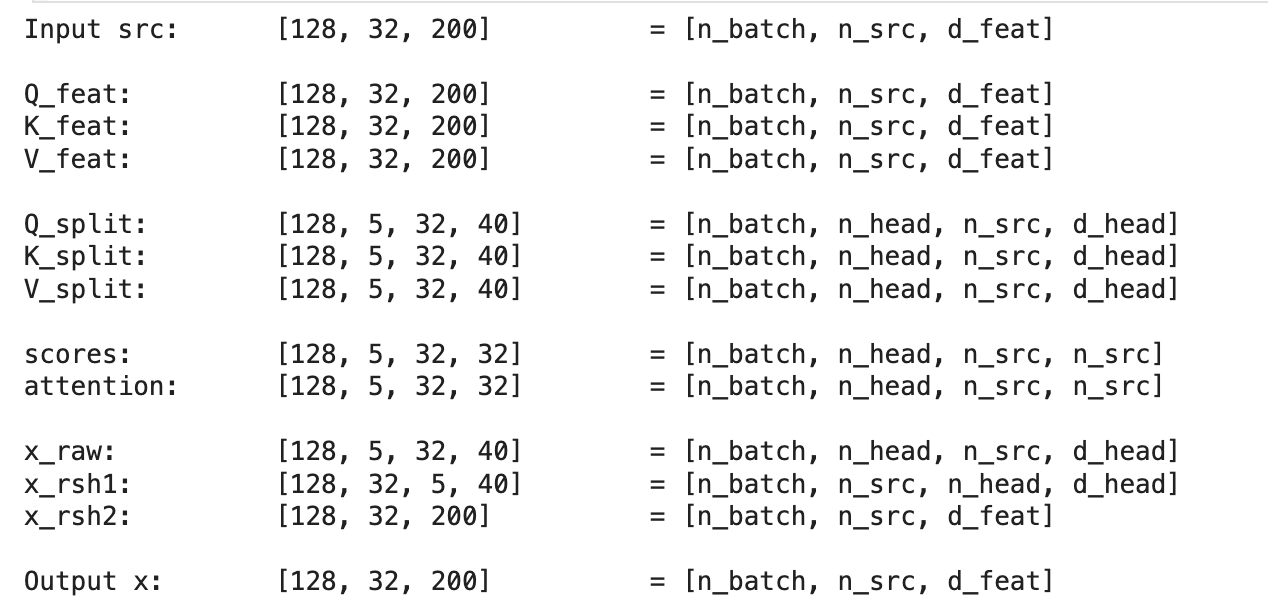

3.2.2 Multi-Head Attention

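Linearly project the queries, keys and values h times with different, learned linear projections to d_k, d_k and d_v dimensions, respectively (producing h sets of projected queries, keys and values).

The attention function is applied to each projected set in parallel, yielding h outputs of dimension d_v. These are concatenated and once again projected, resulting in the final values.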

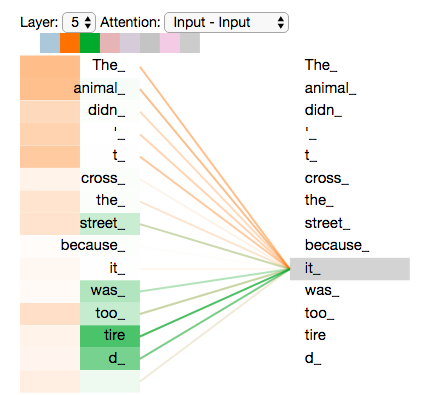

Multi-head attention allows the model to jointly attend to information from different representation subspaces at different positions. With a single attention head, averaging inhibits this.

The paper uses h = 8 heads, each with d_k = d_v = d_model/h = 64. Due to the reduced dimension of each head, the total computational cost is similar to that of single-head attention with full dimensionality (d_model = 512).
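A hedged NumPy sketch of multi-head attention with the dimensions from the notes (d_model = 512, h = 8, so d_k = d_v = 64). It assumes a common implementation shortcut: the h per-head projection matrices are stacked into single d_model × d_model matrices and the result is split into heads by reshaping. All weights are random placeholders.

```python
import numpy as np

def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)

def multi_head_attention(X, W_q, W_k, W_v, W_o, h):
    """Self-attention over X: (n, d_model); all weight matrices are (d_model, d_model)."""
    n, d_model = X.shape
    d_k = d_model // h                                  # per-head width (d_k = d_v here)

    def split(M):
        return M.reshape(n, h, d_k).transpose(1, 0, 2)  # (n, d_model) -> (h, n, d_k)

    Q, K, V = split(X @ W_q), split(X @ W_k), split(X @ W_v)
    scores = Q @ K.transpose(0, 2, 1) / np.sqrt(d_k)    # (h, n, n), one map per head
    heads = softmax(scores) @ V                         # (h, n, d_k)
    concat = heads.transpose(1, 0, 2).reshape(n, d_model)  # concatenate the heads
    return concat @ W_o                                 # final output projection

d_model, h, n = 512, 8, 10
rng = np.random.default_rng(0)
X = rng.normal(size=(n, d_model))
W_q, W_k, W_v, W_o = (rng.normal(size=(d_model, d_model)) * d_model ** -0.5
                      for _ in range(4))
out = multi_head_attention(X, W_q, W_k, W_v, W_o, h)
print(out.shape, d_model // h)
```

The projections cost the same as in a single 512-wide head; only the attention maps are computed per head on 64-wide slices, which is why the total cost stays similar.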

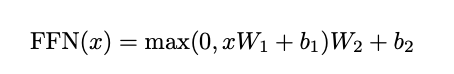

3.3 Position-wise Feed-Forward Network

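Two linear transformations with a ReLU activation in between are applied to each position separately and identically: FFN(x) = max(0, xW_1 + b_1)W_2 + b_2.

self.linear1 = nn.Linear(d_model, d_ff)  # expand each position: d_model -> d_ff

self.linear2 = nn.Linear(d_ff, d_model)  # project back: d_ff -> d_model

3.4 Embeddings and Softmax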

A learned linear transformation followed by a softmax function converts the decoder output into predicted next-token probabilities.
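As a small illustration of this last step, the sketch below maps one decoder output vector to a probability distribution over a toy vocabulary. The vocabulary, the weight values, and the function names are made up for the example; real weights are learned and the vocabulary is far larger.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def next_token_probs(decoder_output, W_vocab):
    """decoder_output: d_model floats; W_vocab: one weight row per vocabulary entry."""
    logits = [sum(w * h for w, h in zip(row, decoder_output)) for row in W_vocab]
    return softmax(logits)

vocab = ["<eos>", "hello", "world"]                 # toy vocabulary (hypothetical)
W_vocab = [[0.1, -0.2], [0.7, 0.3], [-0.4, 0.9]]    # toy weights, d_model = 2
probs = next_token_probs([1.0, 2.0], W_vocab)
print(vocab[probs.index(max(probs))], probs)
```

Greedy decoding would pick the highest-probability token and feed it back as the next decoder input, which is the auto-regressive loop described in section 3.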

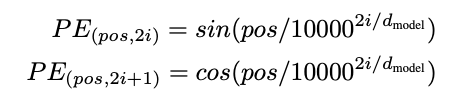

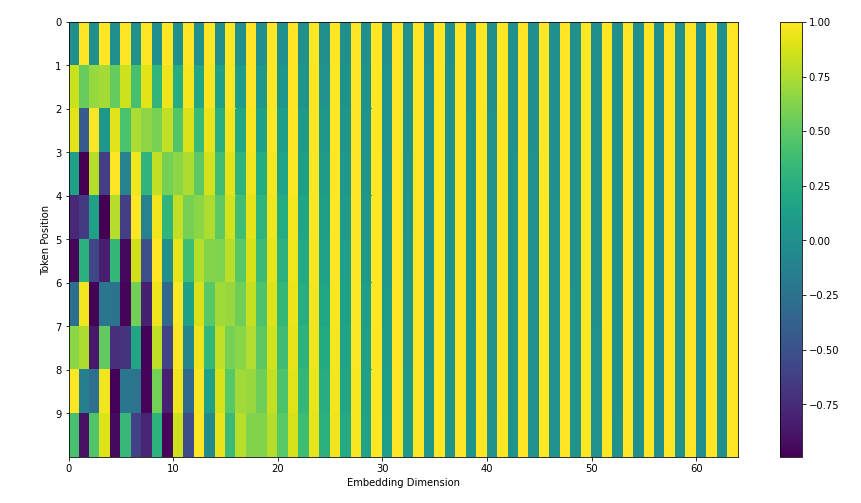

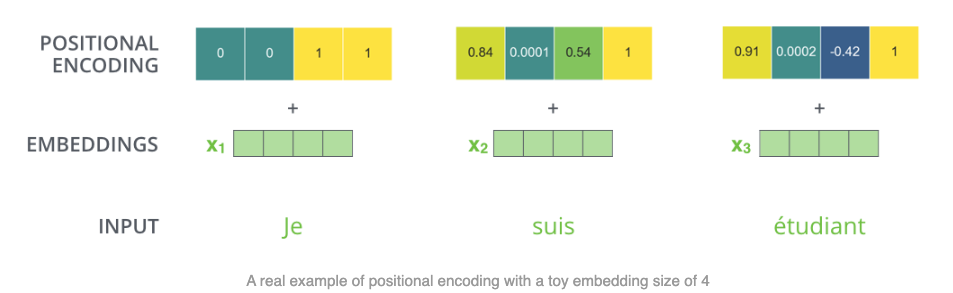

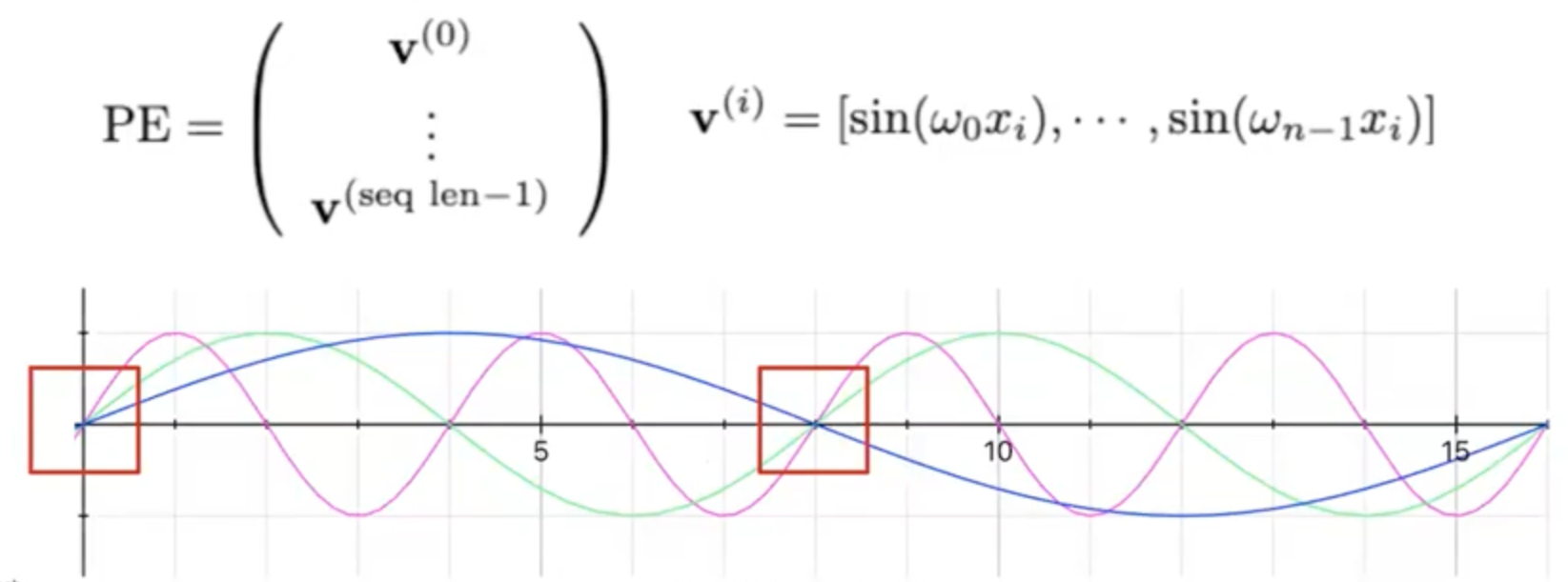

3.5 Positional Encoding

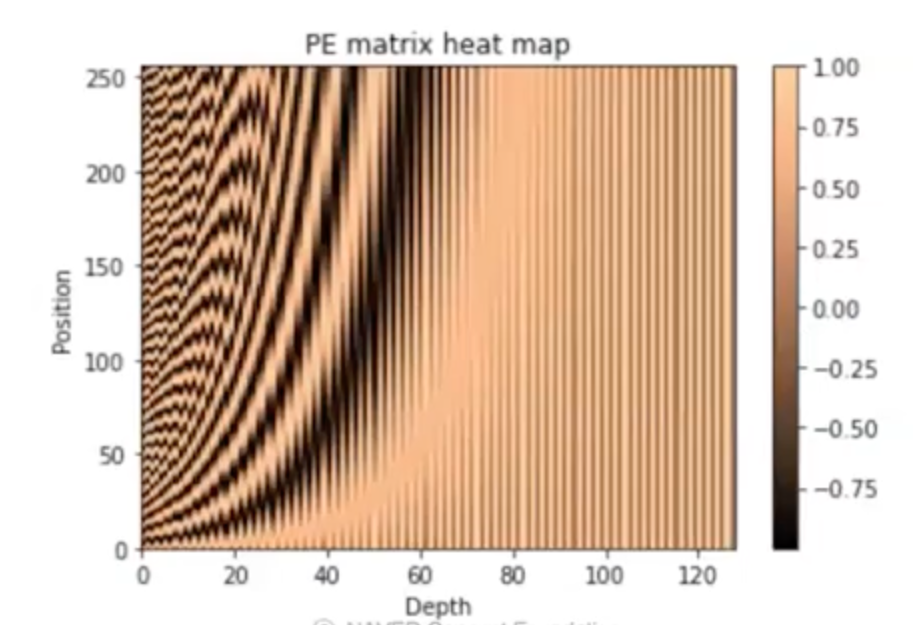

Since our model contains no recurrence and no convolution, in order for the model to make use of the order of the sequence, we must inject some information about the relative or absolute position of the tokens in the sequence.

The wavelengths form a geometric progression from 2π to 10000 · 2π. We chose this function because we hypothesized it would allow the model to easily learn to attend by relative positions.
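For reference, the paper defines the encoding as PE(pos, 2i) = sin(pos / 10000^(2i/d_model)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d_model)). A minimal pure-Python sketch, with illustrative function names, that also computes the wavelength of each dimension pair:

```python
import math

def positional_encoding(pos, d_model):
    """Sinusoidal encoding of one position as a list of d_model floats."""
    pe = []
    for dim in range(0, d_model, 2):            # dim plays the role of 2i
        angle = pos / (10000 ** (dim / d_model))
        pe.append(math.sin(angle))              # even dimension 2i
        pe.append(math.cos(angle))              # odd dimension 2i + 1
    return pe[:d_model]

def wavelength(dim, d_model):
    """Wavelength (in positions) of the sinusoid used at even dimension dim."""
    return 2 * math.pi * 10000 ** (dim / d_model)

print(positional_encoding(0, 8))
print(wavelength(0, 512), wavelength(510, 512))
```

The first pair has wavelength 2π and each later pair is longer by a constant factor, which is the geometric progression mentioned above; the last pair approaches, but does not reach, 10000 · 2π.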

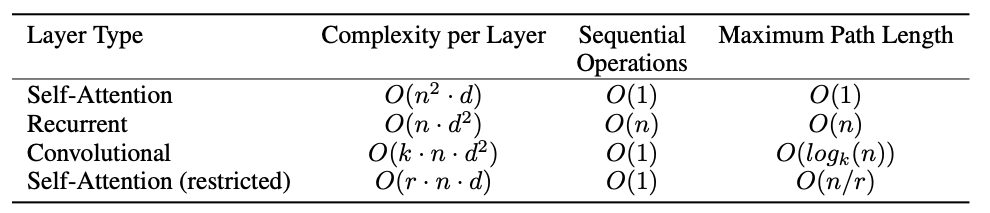

4. Why Self-Attention

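Self-attention is compared with recurrent and convolutional layers on three criteria:

computational complexity per layer

- Convolution: n positions × kernel size k × d × d (input channels × output channels; the output must have the same shape as the input), i.e. O(k · n · d^2)

amount of computation that can be parallelized (measured by the minimum number of sequential operations required)

path length that forward and backward signals have to traverse in the network (the shorter the path, the easier it is for the model to learn long-range dependencies)

* Restricted self-attention considers only a neighborhood of size r around each position.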
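To make the first criterion concrete, the sketch below plugs numbers into the per-layer complexity terms from the paper's Table 1: O(n² · d) for self-attention, O(n · d²) for recurrent layers, O(k · n · d²) for convolution, and O(r · n · d) for restricted self-attention. Constant factors are ignored and the function name is illustrative.

```python
def per_layer_cost(n, d, k=3, r=None):
    """Leading-order operation counts per layer for sequence length n and width d."""
    costs = {
        "self-attention": n * n * d,       # every position attends to every position
        "recurrent": n * d * d,            # one d x d update per position
        "convolutional": k * n * d * d,    # kernel width k, d input and d output channels
    }
    if r is not None:
        costs["self-attention (restricted)"] = r * n * d  # neighborhood of size r
    return costs

short = per_layer_cost(n=50, d=512)
long = per_layer_cost(n=1000, d=512, r=64)
print(short)
print(long)
```

Self-attention is cheaper than a recurrent layer whenever n < d, which holds for typical sentence lengths; for very long sequences the n² term dominates, and that is the case restricted self-attention is meant to address.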

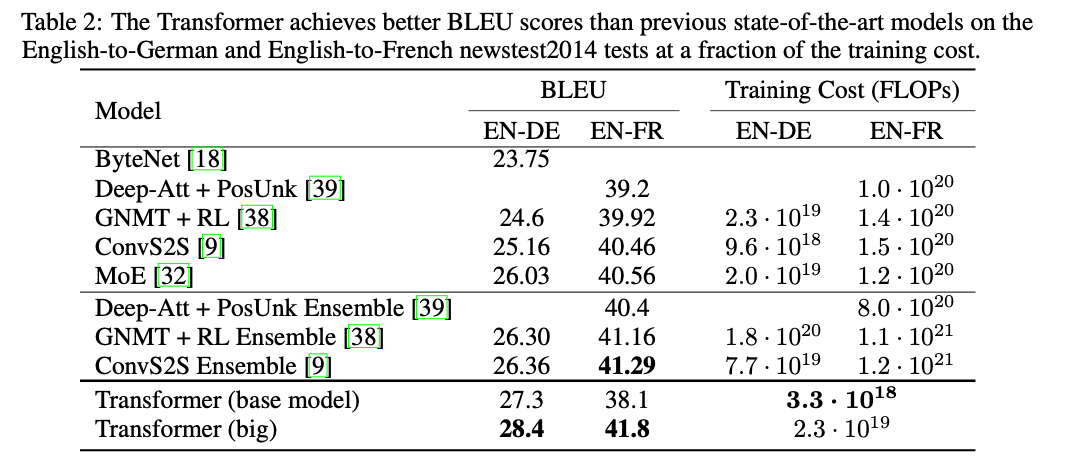

6. Results

6.1 Machine Translation

6.2 Model Variations

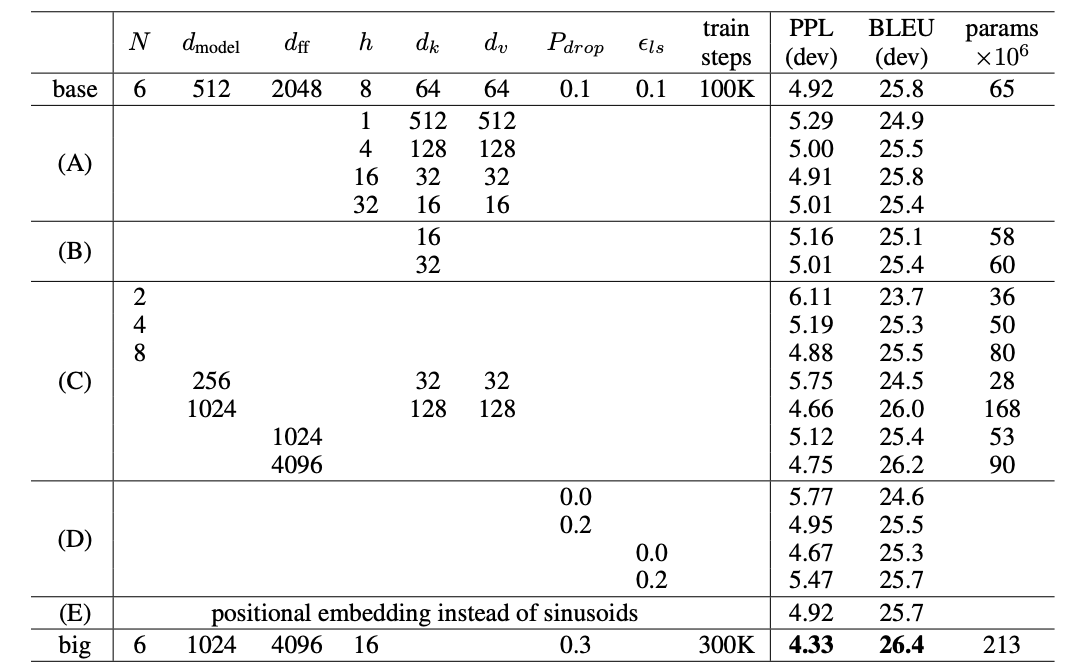

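The importance of each component was examined by varying settings of the Transformer architecture such as the number of heads and the sizes of d_k and d_v.

(A) An appropriate number of heads has to be found: quality drops both with a single head and with too many heads.

(B) Reducing the attention key size d_k hurt performance. With fewer dimensions it is harder to compute compatibility, so a compatibility function other than the dot product might be more effective.

(C)(D) Bigger models performed better, and dropout was effective at preventing overfitting.

(E) No meaningful difference was found between learned positional embeddings and sinusoidal positional encodings.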

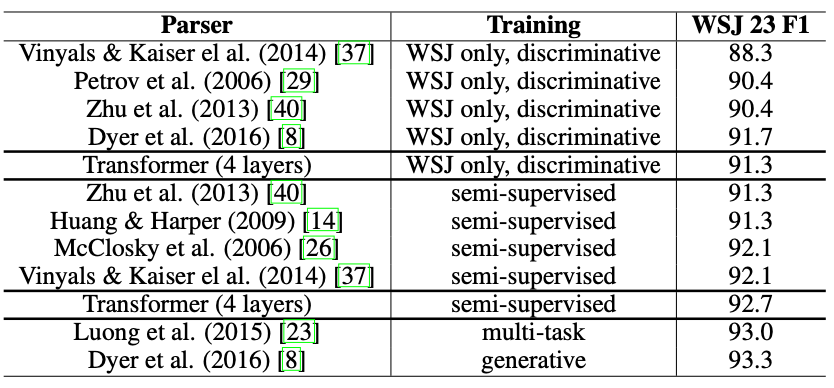

6.3 English Constituency Parsing

To check whether the Transformer also works well on other tasks, the authors ran experiments on English constituency parsing. It performed very well even without detailed task-specific tuning.

7. Conclusion

The first sequence transduction model based entirely on attention.

Trains significantly faster than architectures based on recurrent or convolutional layers.

Appendix.

As a side benefit, self-attention could yield more interpretable models.

Further Studies

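1. Why are sine and cosine functions used together?

If the positional vector were built only from sine functions, as many as the sequence length n, the same positional vector would reappear every period. To prevent this, the two periodic functions are combined.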
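A related point from the paper: for any fixed offset k, PE(pos + k) can be written as a linear function of PE(pos). This only works because each frequency contributes both a sine and a cosine; by the angle-addition identities, shifting by k is a rotation of the (sin, cos) pair whose matrix depends on k alone. The sketch below checks this numerically; the function names and the chosen frequency are illustrative.

```python
import math

def pe_pair(pos, w):
    """The (sin, cos) pair of one frequency w at position pos."""
    return math.sin(w * pos), math.cos(w * pos)

def shift(pair, k, w):
    """Map the pair at some position to the pair at position + k.
    The coefficients depend only on the offset k, not on the position."""
    s, c = pair
    return (s * math.cos(w * k) + c * math.sin(w * k),
            c * math.cos(w * k) - s * math.sin(w * k))

w = 1 / 10000 ** (4 / 512)     # frequency of one dimension pair when d_model = 512
for pos in (3, 40, 200):
    print(shift(pe_pair(pos, w), 7, w), pe_pair(pos + 7, w))
```

With a sine alone, sin(w · (pos + k)) cannot be recovered from sin(w · pos) by a fixed linear map, since the cosine term is missing.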

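2. If the model was trained on sequences of at most 250 tokens, then when a sentence of length 251 comes in as input, the model has never seen the position embedding for position 251.

Relative positional embedding, an embedding scheme that considers the relative positions between tokens, can therefore be used. T5 applies this approach.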
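A simplified sketch of the idea, not T5's exact scheme: each (query, key) pair is described by its offset j - i, clipped to a maximum distance, and the offset indexes a small table of biases that would be added to the attention scores. T5 groups offsets into buckets rather than clipping them; the clipping, the function names, and the table values here are assumptions made for illustration.

```python
def clipped_relative_positions(n, max_distance):
    """Matrix of offsets j - i for query i and key j, clipped to [-max_distance, max_distance]."""
    return [[max(-max_distance, min(max_distance, j - i)) for j in range(n)]
            for i in range(n)]

def relative_bias(n, bias_table, max_distance):
    """One bias per (query, key) pair; bias_table holds 2 * max_distance + 1 entries."""
    offsets = clipped_relative_positions(n, max_distance)
    return [[bias_table[o + max_distance] for o in row] for row in offsets]

max_distance = 4
bias_table = [0.1 * k for k in range(2 * max_distance + 1)]  # placeholder values; learned in practice

seen = {o for row in clipped_relative_positions(250, max_distance) for o in row}
new = {o for row in clipped_relative_positions(251, max_distance) for o in row}
print(new - seen)
```

The set of offsets needed at length 251 is the same as at length 250, so every table entry used for the longer input has already been trained.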

3. Explanation of the Multi-head Attention computation process