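- In sequence data (audio, text, stock prices, etc.), order matters.

- Sequence data easily violates the i.i.d. (independent and identically distributed) assumption.

- "개가 사람을 물었다" vs. "사람을 개가 물었다" (both "a dog bit a person", with the words in a different order): merely changing the order changes the probability distribution of the sequence data.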

- i.i.d.: independent and identically distributed

- Example: roll a die 20 times to get {x1, x2, ..., x20}.

- The random variables xi are mutually independent.

- Every xi follows the same distribution (they share the same marginal distribution).
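The sketch below shows why i.i.d. data ignores order while sequence data does not. The die is a hypothetical loaded die, and the two-state Markov chain uses made-up transition probabilities. The function names are illustrative only.

- Under i.i.d., the joint probability of the 20 rolls is a product of identical marginals, so shuffling the rolls leaves it unchanged.
- In a simple sequence model, reordering the same elements changes the probability.

```python
import math
import random

def iid_log_prob(seq, marginal):
    # i.i.d.: log P(x1, ..., xT) = sum of log p(xt) with the SAME marginal p for every t,
    # so reordering seq cannot change the result
    return sum(math.log(marginal[x]) for x in seq)

def markov_log_prob(seq, initial, transition):
    # First-order Markov chain: P(x1, ..., xT) = p(x1) * prod over t of p(xt | xt-1),
    # so the same elements in a different order can get a different probability
    log_p = math.log(initial[seq[0]])
    for prev, cur in zip(seq, seq[1:]):
        log_p += math.log(transition[prev][cur])
    return log_p

# Hypothetical loaded die, rolled 20 times; every roll shares this marginal distribution
loaded_die = {1: 0.1, 2: 0.1, 3: 0.1, 4: 0.1, 5: 0.1, 6: 0.5}
rolls = random.choices(list(loaded_die), weights=list(loaded_die.values()), k=20)
shuffled = random.sample(rolls, k=len(rolls))  # same rolls, different order
print(math.isclose(iid_log_prob(rolls, loaded_die), iid_log_prob(shuffled, loaded_die)))  # True

# Hypothetical "sticky" two-state chain in which repeating the previous state is likely
initial = {"A": 0.5, "B": 0.5}
transition = {"A": {"A": 0.9, "B": 0.1}, "B": {"A": 0.1, "B": 0.9}}
print(markov_log_prob(["A", "A", "B", "B"], initial, transition))  # ≈ log(0.5 * 0.9 * 0.1 * 0.9)
print(markov_log_prob(["A", "B", "A", "B"], initial, transition))  # ≈ log(0.5 * 0.1 * 0.1 * 0.1)
```

Both Markov sequences contain two A's and two B's. Under this chain, the ordered one is far more likely than the alternating one.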

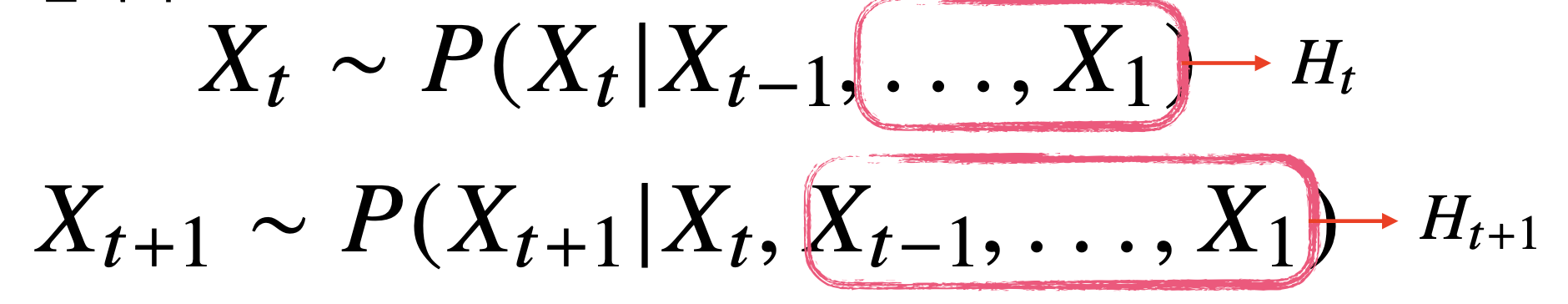

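- Using conditional probability (the product rule, which also underlies Bayes' rule), we can compute the probability of Xt given (X1, ..., Xt-1).

- In other words, we can use the previous part of the sequence to obtain the probability distribution of what comes next. There are several options:

1. use all of the past data,

2. use only a fixed window of the most recent τ elements instead of the entire past sequence, or

3. summarize X1 through Xt-2 into a single hidden state of fixed size, then compute the probability of Xt from that hidden state and Xt-1. This is exactly what an RNN does.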
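Written as formulas, the chain-rule factorization and the three options look like the block below. The symbol $H_t$ for the hidden-state summary is assumed notation, not taken from the figure.

```latex
\begin{aligned}
P(X_1, \dots, X_T) &= \prod_{t=1}^{T} P(X_t \mid X_{t-1}, \dots, X_1) \\
\text{1. all past data:} \quad & P(X_t \mid X_{t-1}, \dots, X_1) \\
\text{2. fixed window } \tau: \quad & P(X_t \mid X_{t-1}, \dots, X_1) \approx P(X_t \mid X_{t-1}, \dots, X_{t-\tau}) \\
\text{3. hidden state:} \quad & P(X_t \mid X_{t-1}, \dots, X_1) \approx P(X_t \mid X_{t-1}, H_t),
  \quad H_t = \text{fixed-size summary of } (X_{t-2}, \dots, X_1)
\end{aligned}
```

The first option conditions on a history that grows with $t$. The other two keep the conditioning information at a fixed size.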

In language, meaning (semantics) depends on the sequence of words: which word comes before or after another.

An RNN keeps information about the sequence so that it can generate, translate, or interpret meaningful sentences.

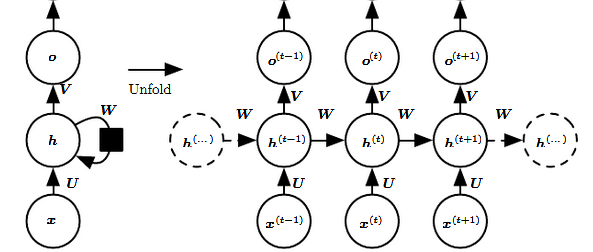

RNNs are called recurrent because:

1. they perform the same computation for every element of a sequence, with the output depending on the previous computations.

2. Another way to think about RNNs is that they have a “memory” which captures information about what has been calculated so far.

In the figure above:

- Left: the compact notation of an RNN.

- Right: the same RNN unrolled (or unfolded) into a full network.

For example, if the sequence we care about is a sentence of 3 words, the network would be unrolled into a 3-layer neural network, one layer for each word.

Input: x(t) is the input at time step t. For example, x(t) could be a one-hot vector corresponding to a word of a sentence.
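The sketch below turns the words of a sentence into one-hot vectors x(t), one row per time step. The toy vocabulary and the helper name `one_hot_sentence` are hypothetical. Real systems usually build the vocabulary from a corpus.

```python
import numpy as np

def one_hot_sentence(words, vocab):
    # Map each word to an index, then put a single 1 in a |V|-dimensional vector per time step
    index = {word: i for i, word in enumerate(vocab)}
    xs = np.zeros((len(words), len(vocab)))
    for t, word in enumerate(words):
        xs[t, index[word]] = 1.0
    return xs  # row t is x(t)

vocab = ["the", "dog", "bit", "man"]
xs = one_hot_sentence(["the", "dog", "bit", "the", "man"], vocab)
print(xs.shape)  # (5, 4)
print(xs[1])     # [0. 1. 0. 0.]
```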

Hidden state:

- h(t) represents a hidden state at time t and acts as “memory” of the network.

- h(t) is calculated based on the current input and the previous time step’s hidden state:

- h(t) = f(U x(t) + W h(t−1)). The function f is a non-linearity such as tanh or ReLU.
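The sketch below computes a single recurrence step in NumPy. The sizes, random initialization and function name are illustrative assumptions. Bias terms are left out to match the formula h(t) = f(U x(t) + W h(t−1)).

```python
import numpy as np

def rnn_step(x_t, h_prev, U, W, f=np.tanh):
    # h(t) = f(U x(t) + W h(t-1)); no bias terms, matching the formula in the text
    return f(U @ x_t + W @ h_prev)

rng = np.random.default_rng(0)
input_size, hidden_size = 4, 3
U = rng.normal(scale=0.5, size=(hidden_size, input_size))   # input-to-hidden weights
W = rng.normal(scale=0.5, size=(hidden_size, hidden_size))  # hidden-to-hidden weights
h0 = np.zeros(hidden_size)                                  # initial "memory" is empty
x1 = np.array([0.0, 1.0, 0.0, 0.0])                         # one-hot input at t = 1
h1 = rnn_step(x1, h0, U, W)
print(h1.shape)  # (3,)
```

To use ReLU instead of tanh, pass `f=lambda z: np.maximum(z, 0.0)`.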

Weights:

- input-to-hidden connections are parameterized by a weight matrix U,

- hidden-to-hidden recurrent connections by a weight matrix W,

- and hidden-to-output connections by a weight matrix V.

All of these weights (U, V, W) are shared across time: the same matrices are reused at every time step.
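The sketch below unrolls an RNN over a short sentence. The same U, W and V are applied at every step, so the unrolled network for a 3-word sentence has one step per word. It assumes f = tanh and o(t) = V h(t) with no biases. The function name and sizes are illustrative.

```python
import numpy as np

def rnn_forward(xs, h0, U, W, V):
    # Unroll the recurrence over the sequence; the SAME U, W, V are reused at every step
    h = h0
    hidden_states, outputs = [], []
    for x_t in xs:                       # one step of the unrolled network per element
        h = np.tanh(U @ x_t + W @ h)     # h(t) = f(U x(t) + W h(t-1)) with f = tanh
        o = V @ h                        # o(t) = V h(t)
        hidden_states.append(h)
        outputs.append(o)
    return np.array(hidden_states), np.array(outputs)

rng = np.random.default_rng(0)
vocab_size, hidden_size = 4, 3
U = rng.normal(scale=0.5, size=(hidden_size, vocab_size))
W = rng.normal(scale=0.5, size=(hidden_size, hidden_size))
V = rng.normal(scale=0.5, size=(vocab_size, hidden_size))
xs = np.eye(vocab_size)[[0, 1, 2]]       # a 3-word sentence as one-hot rows
hs, outputs = rnn_forward(xs, np.zeros(hidden_size), U, W, V)
print(hs.shape, outputs.shape)  # (3, 3) (3, 4)
```

Because the three matrices are shared, the parameter count does not grow with the sequence length. Here it is U.size + W.size + V.size = 12 + 9 + 12 = 33, whether the sentence has 3 words or 300.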

Output:

o(t) = V h(t) is the output at time t. In the figure, there is an arrow after o(t) because the output is often passed through a further non-linearity, especially when the network contains further layers downstream.
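One common choice for that non-linearity, assumed here rather than taken from the figure, is a softmax. It turns o(t) into a probability distribution ŷ(t) over the vocabulary. Training then typically uses the cross-entropy loss, the negative log-probability of the correct next word. The output scores below are made-up numbers.

```python
import numpy as np

def softmax(o):
    # Subtract the max for numerical stability; the result is non-negative and sums to 1
    z = np.exp(o - np.max(o))
    return z / z.sum()

def cross_entropy(y_hat, target_index):
    # Negative log-probability assigned to the correct class (e.g. the true next word)
    return -np.log(y_hat[target_index])

o_t = np.array([2.0, 1.0, 0.1, -1.0])  # hypothetical output scores o(t) for a 4-word vocabulary
y_hat = softmax(o_t)
loss = cross_entropy(y_hat, target_index=0)
print(y_hat.round(3), loss)
```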

Backpropagation Through Time: https://jsdysw.tistory.com/429


More about RNNs: http://karpathy.github.io/2015/05/21/rnn-effectiveness/
