Interventional Speech Noise Injection for ASR Generalizable Spoken Language Understanding

Recently, pre-trained language models (PLMs) have been increasingly adopted in spoken language understanding (SLU). However, automatic speech recognition (ASR) systems frequently produce inaccurate transcriptions, leading to noisy inputs for SLU models, wh

arxiv.org

0 Abstract

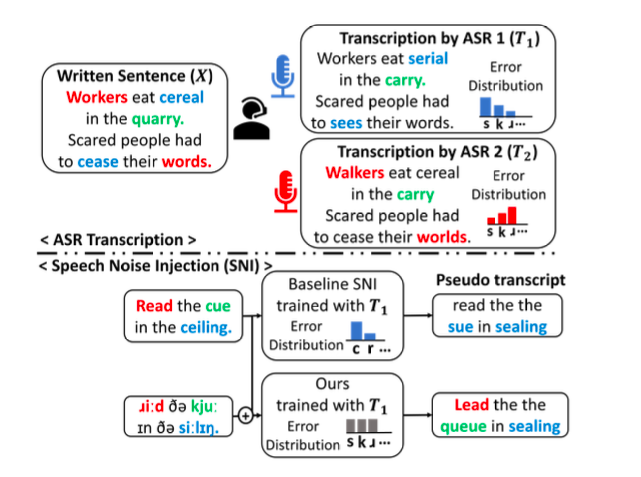

1. ASR errors are propagated to SLU models.

2. Train SLU (Spoken Language Understanding) models to withstand ASR errors by exposing them to ASR-plausible noises, i.e. noises of the kind observed in real ASR systems.

3. Speech Noise Injection (SNI) generates likely incorrect transcriptions, which are then used in SLU training.

4. Different ASR systems generate different ASR errors (ASR1: blue; ASR2: red; errors common to both, i.e. ASR∗: green).

5. Biased toward a specific ASR1, baseline SNI generates noises that are plausible only for ASR1.

6. An SLU model trained with these noises struggles with the errors from a different system, ASRj.

7. Our method, ISNI, generates ASR∗-plausible noises (red, green, blue) even though it is trained only on ASR1.

- The paper proposes building an SNI model, F∗, capable of generating “ASR∗-plausible” pseudo transcripts T∗, which are plausible for any ASR system, ASR∗.

1 Introduction

1. ASR error words, which are phonetically similar to the spoken words but semantically unrelated, impede the semantic understanding of speech in SLU tasks.

2. Speech noise injection (SNI) generates likely incorrect transcriptions and then exposes PLMs to these pseudo transcriptions while training on SLU tasks.

- It attempts to replicate ASR errors starting from written text, producing training samples for the LM.

3. However, this ASR-plausibility is conditional on a particular ASR system: the generated errors follow only the error distribution observed in that system's collected transcriptions.

- Different ASR systems have distinct error distributions, which makes it hard to use the trained SLU models with other ASR systems.

4. The paper introduces noises that generalize better, learned from a single ASR system, by

- interventional noise injection through "do-calculus"

- phoneme-aware generation

2 Related Work

2.1 SNI

1. uses a TTS engine to convert text into audio, which is then transcribed by an ASR system into pseudo transcriptions.

- different error distributions between human and TTS-generated audio

- less representative of actual ASR errors.

2. Textual perturbation replaces words in the text with noise words, using a scoring function that estimates how likely an ASR system is to misrecognize each word.

- These methods often employ phonetic similarity functions, among others.

3. Auto-regressive generation uses PLMs like GPT-2 or BART to generate text that mimics the likelihood of ASR errors in a contextually aware manner, producing more plausible ASR-like noise.

- It has shown superior performance over the other categories, so it is used as our baseline.

2.2 ASR Correction

1. As an alternative to SNI, ASR correction aims to denoise a (possibly noisy) ASR transcription Ti into X by detecting ASR errors and then correcting them.

2. It introduces additional latency in the SLU pipeline, since correction must run before the SLU model can perform its task.

3. The innate robustness of PLMs makes SNI outperform ASR correction.

3 Method

1. Formally define the problem of SNI and outline its causal diagram.

3.1 Problem Formulation

1. Fi : X -> Ti

- SNI model mimics the transcriptions of ASRi

2. given the written ground truth X with n words as input

3. the SNI model Fi outputs the pseudo transcription of ASRi

4. For each word xk, zk indicates whether ASRi makes an error on it or not.

5. However, the noises generated by this model (trained with ASRi outputs) differ from those of another system ASRj, and an SLU model trained with the generated noises struggles with the errors from ASRj.

6. The goal is to build an SNI model F∗, capable of generating “ASR∗-plausible” pseudo transcripts T∗.

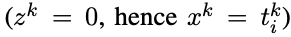

3.2 Causality in SNI

1. In ASR transcription, X is first spoken as audio A, which is then transcribed as transcription T by ASRi. Depending on the audio A, ASRi may make errors (Z = 1) or not (Z = 0) in the transcription T.

- X influences Z through causal paths

- X → T : There is a direct causal relationship where the clean text X influences the transcribed text T .

- Z → T: If zk ∈ Z is 1 (an error occurs), it directly affects the corresponding transcription tki, causing it to deviate from the clean text xk.

X: "I want to book a flight to Paris."

T: "I want to book a light to Paris."

zk : Phonetic substitution ("flight" → "light").

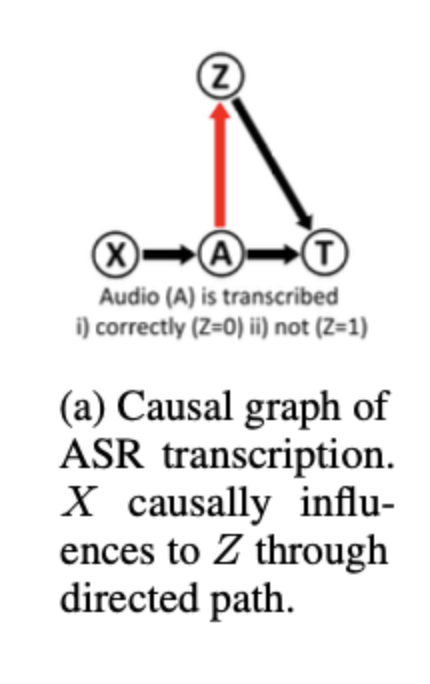

2. In the SNI training data collection process, the pairs (X, T) transcribed by ASRi are filtered based on Z: pairs without any error are discarded, since they do not exhibit the error patterns required for training.

- Such filtering induces a backdoor path and a non-causal relation in SNI.

- Z → X: Z determines whether X is included. A text enters the training data only when the ASR system makes a mistake on it, i.e. when some zk ∈ Z is 1.

- So, errors by the ASR system decide which clean texts are chosen.

- The backdoor path X ← Z → T ensures that only instances where ASRi made errors are included, but it also biases previous SNI models, which are trained with the conventional likelihood.

3. zk conversely influences xk and acts as a confounder (a variable that influences both the cause and the effect).

Workers eat cereal -> Walkers eat cereal (selected as SNI training set)

Workers are too lazy -> Workers r too lazy.(selected as SNI training set)

Workers are always unhappy -> Workers are always unhappy (filtered out)

P(Workers -> Walkers|xk) = 1/3

SNI is trained with

Workers eat cereal -> Walkers eat cereal

Workers are too lazy -> Workers r too lazy.

P(zk|xk) = 1/2

When generating pseudo transcription, substitute Workers with Walkers

Workers are lazy -> Walkers are lazy

SNI thinks P(zk|xk) = 1/2

such distortion skews the prior probability P(zk|xk)

=> SNI reinforces patterns already present in ASRi

=> SNI is overfitted to ASRi

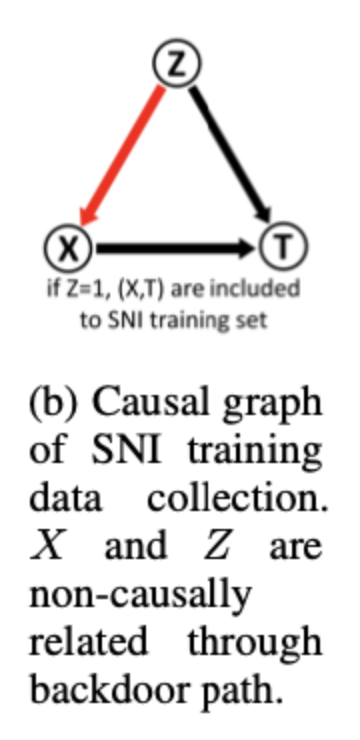

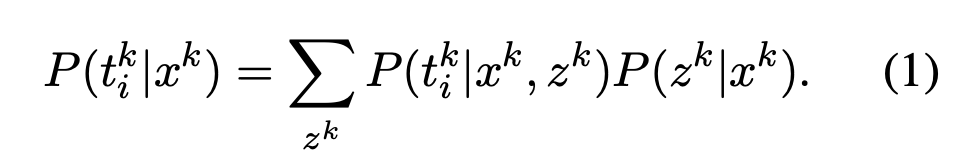

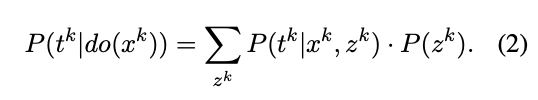
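The Workers/Walkers example above can be replayed in a few lines. This is only an illustrative sketch: the three sentence pairs are the ones from the notes, while the helper `error_rate` is a hypothetical name introduced here, not something from the paper.

```python
# Toy (written text, ASR_i transcription) pairs from the Workers/Walkers example.
pairs = [
    ("Workers eat cereal", "Walkers eat cereal"),
    ("Workers are too lazy", "Workers r too lazy."),
    ("Workers are always unhappy", "Workers are always unhappy"),
]

def error_rate(pairs, word, noise):
    """Fraction of pairs containing `word` in which it was replaced by `noise`."""
    with_word = [(x, t) for x, t in pairs if word in x.split()]
    corrupted = [
        (x, t) for x, t in with_word
        if noise in t.split() and word not in t.split()
    ]
    return len(corrupted) / len(with_word)

# Rate over everything ASR_i transcribed: 1 of 3 sentences.
true_rate = error_rate(pairs, "Workers", "Walkers")

# SNI data collection keeps only pairs where an error occurred (Z decides X).
filtered = [(x, t) for x, t in pairs if x != t]

# Rate seen by the SNI model after filtering: 1 of 2 sentences.
biased_rate = error_rate(filtered, "Workers", "Walkers")

print(true_rate, biased_rate)
```

The gap between the two rates is the skew in P(zk|xk) that the filtering step introduces.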

3.3 do-calculus for ISNI

1. To mitigate biases in SNI toward the ASRi, we propose interventional SNI (ISNI) where the non-causal relations are cut off by adopting do-calculus.

2. Conventional likelihood (SNI model)

- P(tki∣xk): The probability of the transcription tki given the original input xk

- P(zk∣xk): The probability of a specific noise pattern zk occurring, given the input xk. This models the likelihood of different noise types.

- P(tki∣xk, zk): The probability of tki, given xk and a specific noise pattern zk. This models how zk influences the transcription.

- The summation over zk accounts for all possible noise patterns that could have affected the transcription tki

3. do(xk) means intervening on xk, which removes every incoming influence on xk.

- Once the arrow pointing into X from Z is removed, X and Z are independent, so P(z|do(X)) = P(z).

- The non-causal path Z → X is cut off.

- z no longer depends on x, so the distribution of z is the same for every xk.

4. do-calculus lets SNI deviate from the error distribution observed in ASRi, thereby broadening the error patterns in the resulting pseudo transcripts.
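Written out, the two likelihoods contrasted in this section look as follows. This is a reconstruction from the term-by-term descriptions in these notes (the originals are in the figure), so the exact typography of the paper's equations is an assumption.

```latex
\begin{align}
P(t_k^i \mid x_k) &= \sum_{z_k} P(t_k^i \mid x_k, z_k)\, P(z_k \mid x_k)
  && \text{(conventional SNI)} \\
P(t_k \mid do(x_k)) &= \sum_{z_k} P(t_k \mid x_k, z_k)\, P(z_k)
  && \text{(interventional SNI)}
\end{align}
```

The only difference is the prior: P(zk|xk), which carries the bias of ASRi, is replaced by P(zk), which does not depend on the word.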

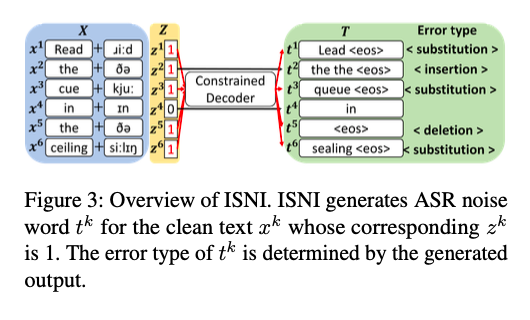

3.4 Overview of Our Proposed Approach

1. ISNI implements two distinct terms in Eq. 2: P(zk) and P(tk|xk,zk)

2. For the debiased P(zk), ISNI explicitly controls zk and removes its dependence on xk by implementing SNI with constrained decoding.

3. For ASR∗-plausible generation P(tk|xk,zk), ISNI provides the phonetic information of x (e.g., "cereal" could plausibly be heard as "serial").

3.5 Interventional SNI for Debiasing

3.5.1 cut off z->x, x-> z

1. The debiased P(zk) represents the probability that any given word xk will be corrupted.

- ensures that the corruption of words xk in the pseudo transcripts does not depend on any particular ASR system

- it allows for the corruption of words that are typically less susceptible to errors under ASRi .

2. To simulate P(z), we assume that the average error rate of any ASR system, ASR∗, is equal for all words.

3. Sample a random variable ak for each word xk from a continuous uniform distribution over the interval [0,1].

- If ak ≤ P (z), we set z to 1, indicating an incorrect transcription of xk and generating its noise word tk .

- Otherwise, z is set to 0, and tk remains identical to xk

4. The prior probability P(z) is treated as a constant hyperparameter for pseudo transcript generation.
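The sampling rule in items 3 and 4 can be sketched as below. The function name `sample_error_mask` and the value 0.3 for P(z) are placeholders chosen for illustration; the notes only state that P(z) is a constant hyperparameter.

```python
import random

def sample_error_mask(words, p_z, rng):
    """Draw z_k for every word: z_k = 1 iff a_k <= P(z), with a_k ~ Uniform[0, 1]."""
    mask = []
    for _ in words:
        a_k = rng.random()  # one independent draw per word, regardless of the word
        mask.append(1 if a_k <= p_z else 0)
    return mask

words = "Read the cue in the ceiling".split()
rng = random.Random(0)  # fixed seed only to make the sketch reproducible
z = sample_error_mask(words, p_z=0.3, rng=rng)  # 0.3 is an assumed value of P(z)
print(list(zip(words, z)))
```

Because the draw never looks at the word itself, every word is equally likely to be corrupted, including words that ASRi rarely gets wrong.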

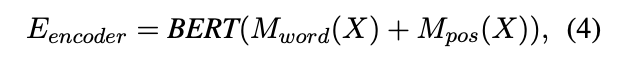

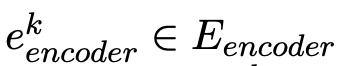

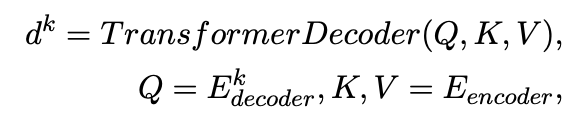

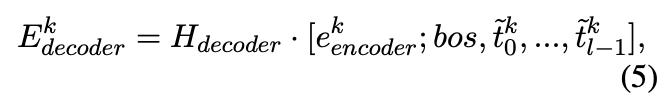

3.5.2 Model architecture

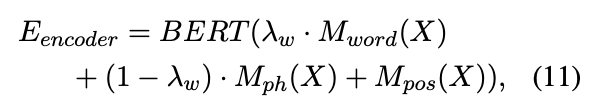

1. ek_encoder is the encoder representation of the token xk ∈ X, and Mword and Mpos denote the word and position embeddings.

2. Compute the hidden representation dk, where Ek_decoder serves as the query Q and E_encoder is used for both the key K and the value V in the transformer.

Consider the following example:

Read the cue in the ceiling

-> SNI ->

Read the the queue in the ceiling

-----------------------------------------

By random sampling, z was

z1 = 1

z2 = 1

else 0

-----------------------------------------

iter1)

[the, bos] -> [the, bos, the] -> [the, bos, the, the] -> [the, bos, the, the, eos].

iter2)

[cue, bos] -> [cue, bos, queue] -> [cue, bos, queue, eos].

3. Ek_decoder

- bos : special embedding vector, beginning of sequence

- H_decoder : weight matrix of the hidden layer in decoder

- {tk0, ... , tkl-1} : tokens generated so far

"the" is replaced by "the the"

step 1)

decoder input [xk, bos]

decoder output [the]

step 2)

decoder input [xk, bos, the]

decoder output [the]

step 3)

decoder input [xk, bos, the, the]

decoder output [eos]
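The step-by-step trace above can be mimicked with a toy loop. This is a sketch under strong assumptions: `TOY_NEXT_TOKEN` is a hand-written lookup table standing in for the trained decoder, and `decode_noise` / `inject_noise` are hypothetical helper names, not the paper's code.

```python
BOS, EOS = "<bos>", "<eos>"

# Hypothetical stand-in for the trained decoder: maps the decoder input so far
# to the next token. A real ISNI decoder would compute this with a transformer.
TOY_NEXT_TOKEN = {
    ("the", BOS): "the",
    ("the", BOS, "the"): "the",
    ("the", BOS, "the", "the"): EOS,
    ("cue", BOS): "queue",
    ("cue", BOS, "queue"): EOS,
}

def decode_noise(x_k, next_token, max_len=8):
    """Generate the noise tokens for one word, starting from [x_k, bos]."""
    decoder_input = [x_k, BOS]
    for _ in range(max_len):
        token = next_token(tuple(decoder_input))
        decoder_input.append(token)
        if token == EOS:
            break
    # Drop x_k, bos and the trailing eos; keep only the generated tokens.
    return [t for t in decoder_input[2:] if t != EOS]

def inject_noise(words, z, next_token):
    """Copy words with z_k = 0 and replace words with z_k = 1 by decoded noise."""
    out = []
    for x_k, z_k in zip(words, z):
        out.extend(decode_noise(x_k, next_token) if z_k == 1 else [x_k])
    return out

words = "Read the cue in the ceiling".split()
z = [0, 1, 1, 0, 0, 0]  # the first "the" and "cue" are marked as corrupted
pseudo = inject_noise(words, z, TOY_NEXT_TOKEN.__getitem__)
print(" ".join(pseudo))
```

Decoding each marked word separately is what lets z be fixed from outside, instead of letting the generator decide which words to corrupt.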

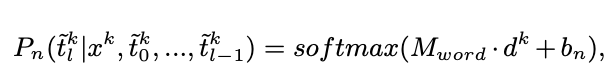

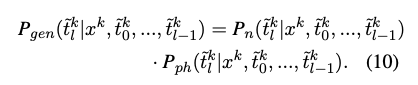

done)

4. The probability of generating each token is

- M_word : word embedding matrix

- bn : trained bias
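As a rough illustration of how M_word and bn could turn the hidden representation dk into token probabilities, the sketch below assumes the usual output layer softmax(M_word · dk + bn). The exact equation is in the figure, so this form, the three-word vocabulary, and all numeric values are assumptions.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def token_probabilities(d_k, m_word, b_n):
    """Assumed output layer: P_n = softmax(M_word . d_k + b_n) over the vocabulary."""
    logits = [
        sum(w * d for w, d in zip(row, d_k)) + b
        for row, b in zip(m_word, b_n)
    ]
    return softmax(logits)

# Toy values: 3-word vocabulary, 2-dimensional hidden state (all hypothetical).
vocab = ["the", "queue", "<eos>"]
m_word = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # one embedding row per vocabulary word
b_n = [0.0, 0.0, 0.0]
d_k = [0.2, 2.0]

p_n = token_probabilities(d_k, m_word, b_n)
best = vocab[p_n.index(max(p_n))]
print(best, p_n)
```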

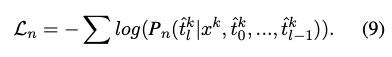

5. Use the ASR transcription T̂ from ASRi to train the ISNI model.

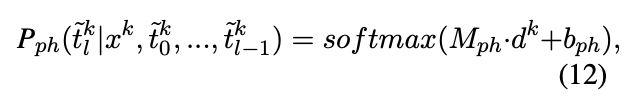

3.6 Phoneme-aware Generation for Generalizability

1. Adjust the generation probability Pn with the phoneme-based generation probability Pph so that ASR∗-plausible noise words can be generated, as follows.

2. Phoneme embedding Mph is incorporated into the input alongside word and position embeddings as follows

- λw is a hyperparameter that balances the influence of word embeddings and phoneme embeddings.

- Given both word and phoneme embeddings, the decoder can use the phonetic information of both the encoder and decoder inputs.

- Mph : phoneme embedding matrix

- Pph : how phonetically close tk is to xk

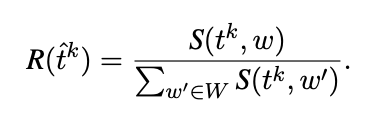

3. To supervise Pph, phonetic similarity should be formulated as a probability distribution.

- S(tk, w) : distance between tk and phonetic code of xk

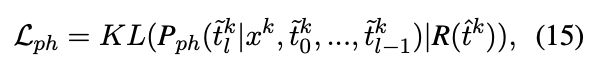

4. Pph is supervised by KL divergence loss

5. Finally ISNI is optimized to jointly minimize the total loss Ltot

- λph is a hyperparameter that weights Lph
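Items 3 to 5 can be sketched end to end on toy data. Everything concrete here is an assumption made for illustration: the similarity is taken as "longest phoneme length minus edit distance, clipped at 0", the KL direction is target-to-prediction, the total loss is taken as Ln + λph · Lph, and the phoneme codes, Pph values, Ln and λph are invented numbers.

```python
import math

def edit_distance(a, b):
    """Levenshtein distance between two sequences (here: phoneme lists)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1, cur[j - 1] + 1, prev[j - 1] + (ca != cb)))
        prev = cur
    return prev[-1]

def similarity_distribution(target_ph, vocab_ph):
    """Turn phoneme edit distances into a probability distribution over the vocabulary."""
    longest = max(len(target_ph), *(len(p) for p in vocab_ph.values()))
    sims = {w: max(longest - edit_distance(target_ph, p), 0) for w, p in vocab_ph.items()}
    total = sum(sims.values())
    return {w: s / total for w, s in sims.items()}

def kl_divergence(target, predicted, eps=1e-12):
    """KL(target || predicted), skipping zero-probability target entries."""
    return sum(p * math.log(p / max(predicted[w], eps)) for w, p in target.items() if p > 0)

# Hypothetical ARPAbet-like phoneme codes for a tiny vocabulary.
vocab_ph = {
    "cue": ["K", "Y", "UW"],
    "queue": ["K", "Y", "UW"],
    "ceiling": ["S", "IY", "L", "IH", "NG"],
    "the": ["DH", "AH"],
}
target = similarity_distribution(vocab_ph["cue"], vocab_ph)

p_ph = {"cue": 0.4, "queue": 0.3, "ceiling": 0.1, "the": 0.2}  # made-up model output
l_ph = kl_divergence(target, p_ph)

l_n, lambda_ph = 1.25, 0.5  # made-up generation loss and loss weight
l_tot = l_n + lambda_ph * l_ph
print(target, l_ph, l_tot)
```

With these toy codes, "cue" and "queue" receive the same target probability because their phoneme sequences are identical, which is the kind of signal the phonetic loss is meant to pass on to Pph.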

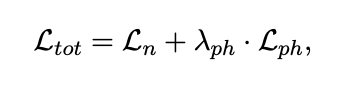

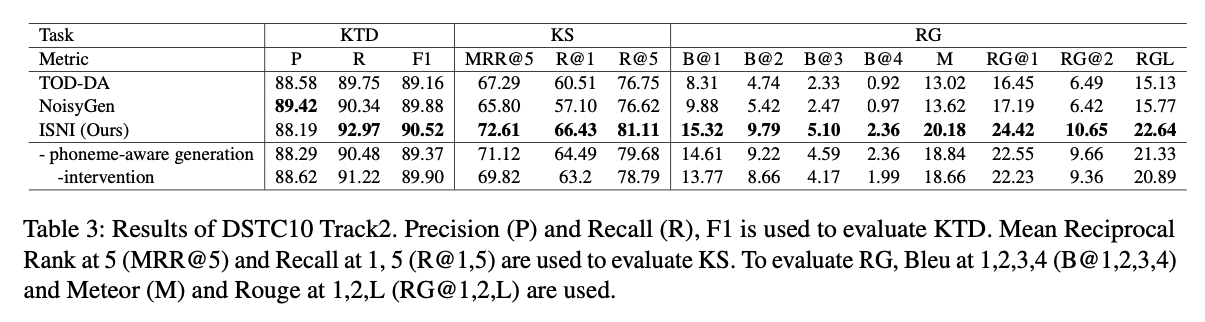

4 Experiments

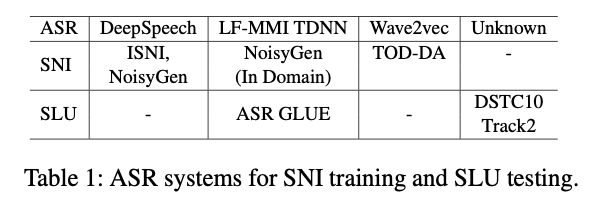

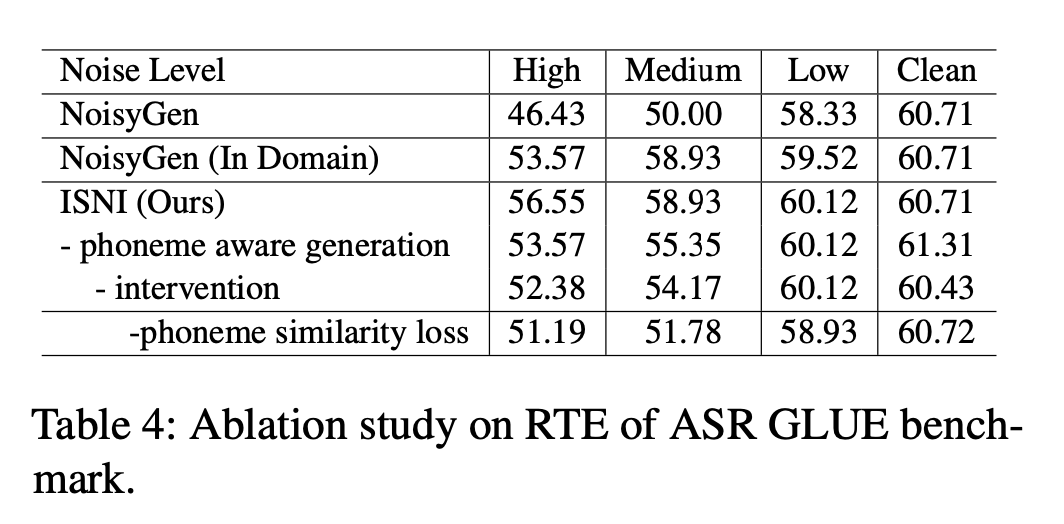

- The ASR-GLUE benchmark is a collection of 6 different NLU (Natural Language Understanding) tasks for evaluating the performance of PLMs under automatic speech recognition (ASR) errors, across 3 levels of background noise and 6 speakers with various voice characteristics.

- TOD-DA is trained on wav2vec

- One NoisyGen is trained on LF-MMI TDNN and the other on DeepSpeech

- The evaluation of NoisyGen (trained with LF-MMI TDNN) on ASR-GLUE is in-domain

- For the PLM, BERT-base is used for ASR-GLUE and GPT2-small for the DSTC10 tracks

- NoisyGen is consistently outperformed by NoisyGen (In Domain) in every task, which shows that existing auto-regressive generation-based SNI models struggle to generalize to other ASR systems.

- ISNI can robustify SLU models in the ASR zero-shot setting.

- ISNI surpasses NoisyGen in every task at every noise level, and even surpasses NoisyGen (In Domain) at high and medium noise levels for QNLI and at low noise levels for SST2.

This result demonstrates how phoneme-aware generation improves the robustness of SLU models in noisy environments.

- Ablation on the phoneme similarity loss: ISNI is trained without the phonetic similarity loss, relying solely on phoneme embeddings.