Zipformer: A faster and better encoder for automatic speech recognition


arxiv.org

0. Abstract

The Conformer has become the most popular encoder model for automatic speech recognition. It adds convolution modules to a Transformer to learn both local and global dependencies.

In this work we describe a faster, more memory-efficient, and better-performing Transformer, called Zipformer. Its main changes are:

- a U-Net-like encoder structure where middle stacks operate at lower frame rates

- re-organized block structure with more modules, within which we re-use attention weights for efficiency

- a modified form of LayerNorm, called BiasNorm, which retains some length information in normalization

- new activation functions, SwooshR and SwooshL, which work better than Swish

- a new optimizer, called ScaledAdam, which scales each update by the tensor's current scale to keep the relative change about the same, and also explicitly learns the parameter scale. It achieves faster convergence and better performance than Adam.

1. Introduction

| Conformer | Zipformer |
|---|---|
| operates on the sequence at a constant frame rate | adopts a U-Net-like structure |
| Conformer block | Zipformer block: roughly two Conformer blocks deep, reusing attention weights |
| LayerNorm | BiasNorm |
| Swish | SwooshR and SwooshL |
| Adam | ScaledAdam, a parameter-scale-invariant version of Adam |

- Zipformer converges faster during training.

- It speeds up inference by more than 50% compared to previous studies while requiring less GPU memory.

- It achieves state-of-the-art results.

2. Related Work

- depthwise separable convolutions

- depthwise conv followed by pointwise conv

- used for efficiency: less computation than a general convolution

- Squeeze-and-excitation: captures longer context

- Transformer: learns global dependencies with self-attention

- Conformer: models both local (CNN) and global (Transformer) contextual information

- Squeezeformer: the first to adopt a U-Net structure

- Branchformer

- E-Branchformer

** Why does a general convolution take more computation than a depthwise separable convolution?

- I: input channels, O: output channels, K: kernel size, D: feature map size (D × D)

- Standard convolution: $K^2 \cdot D^2 \cdot I \cdot O$ multiplications

- Depthwise separable convolution = depthwise conv + pointwise conv: $K^2 \cdot I \cdot D^2 + I \cdot O \cdot D^2$ multiplications

- Dividing the two: $\dfrac{K^2 I D^2 + I O D^2}{K^2 D^2 I O} = \dfrac{1}{O} + \dfrac{1}{K^2}$
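The ratio can be checked numerically. A minimal plain-Python sketch; the layer sizes (K = 3, I = O = 256, D = 32) are hypothetical values chosen only for illustration, and only multiplications are counted (biases ignored):

```python
from fractions import Fraction


def standard_conv_mults(K: int, D: int, I: int, O: int) -> int:
    # each of the D*D output positions and O output channels sums over K*K*I inputs
    return K * K * D * D * I * O


def depthwise_separable_mults(K: int, D: int, I: int, O: int) -> int:
    depthwise = K * K * I * D * D  # one K x K filter per input channel
    pointwise = I * O * D * D      # 1 x 1 conv mixing I channels into O channels
    return depthwise + pointwise


K, D, I, O = 3, 32, 256, 256
std = standard_conv_mults(K, D, I, O)
sep = depthwise_separable_mults(K, D, I, O)
ratio = Fraction(sep, std)  # exact ratio, equal to 1/O + 1/K**2 (about 0.115)
print(std, sep, float(ratio))
```

With a 3 × 3 kernel the separable version costs roughly 1/9 of the standard convolution, and the 1/O term becomes negligible as the number of output channels grows.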

3. Method

3.1 Downsampled Encoder Structure

- Conv-Embed

- Given acoustic features at a frame rate of 100 Hz, the convolution-based module called Conv-Embed first reduces the length by a factor of 2 (to 50 Hz).

- [N, T, 80] -> [N, (T-3)//2-2, output_channel], i.e. (T-7)//2 output frames

- Downsample & Upsample

- symmetric scaling down and scaling up in sequence length, respectively

- 6 cascaded stacks

- the obtained sequence from above is fed into 6 cascaded stacks to learn temporal representation at frame rates of 50Hz, 25Hz, 12.5Hz, 6.25Hz, 12.5Hz, and 25Hz, respectively

- Different stacks have different embedding dimensions. The output of each stack is truncated or padded with zeros to match the dimension of the next stack

- The final encoder output dimension is set to the maximum of all stacks’ dimensions.

- If the last stack's output has the largest dimension, it is taken as the encoder output; otherwise, the output is concatenated from pieces of different stack outputs, taking each dimension from the most recent output that has it. (Concatenation starts from the most recent stack outputs and continues until the maximum dimension is filled.)

- Bypass

- learns channel-wise scalar weights c

- input x and module output y

- Bypass : (1 − c) ⊙ x + c ⊙ y
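A minimal PyTorch sketch of how stack outputs could be matched in width (truncate or zero-pad), combined by the bypass, and assembled into the full-dimension encoder output. The helper names `convert_num_channels`, `Bypass` and `get_full_dim_output`, and the initial value c = 0.5, are assumptions for illustration rather than the reference implementation; the stack dimensions (192, 256, 384, 512, 384, 256) follow the shape trace in these notes.

```python
import torch
import torch.nn as nn


def convert_num_channels(x: torch.Tensor, num_channels: int) -> torch.Tensor:
    """Hypothetical helper: truncate or zero-pad the last (channel) dim of x."""
    if num_channels <= x.shape[-1]:
        return x[..., :num_channels]
    pad_shape = list(x.shape[:-1]) + [num_channels - x.shape[-1]]
    zeros = torch.zeros(pad_shape, dtype=x.dtype, device=x.device)
    return torch.cat([x, zeros], dim=-1)


class Bypass(nn.Module):
    """Hypothetical bypass: (1 - c) * x + c * y with a learnable channel-wise c."""

    def __init__(self, num_channels: int, init_c: float = 0.5):
        super().__init__()
        self.c = nn.Parameter(torch.full((num_channels,), init_c))

    def forward(self, x: torch.Tensor, y: torch.Tensor) -> torch.Tensor:
        # c broadcasts over the (seq_len, batch) dims of x and y
        return (1 - self.c) * x + self.c * y


def get_full_dim_output(outputs: list) -> torch.Tensor:
    """Build a max-dim output, taking each channel from the most recent
    stack output that has it (newest stacks first)."""
    pieces = [outputs[-1]]
    cur_dim = outputs[-1].shape[-1]
    for out in reversed(outputs[:-1]):
        if out.shape[-1] > cur_dim:
            pieces.append(out[..., cur_dim:])  # only the channels still missing
            cur_dim = out.shape[-1]
    return torch.cat(pieces, dim=-1)


# usage: stack i's output is filled with the value i so the pieces are traceable
dims = [192, 256, 384, 512, 384, 256]
outs = [torch.full((5, 2, d), float(i)) for i, d in enumerate(dims)]
full = get_full_dim_output(outs)       # (5, 2, 512)
x = convert_num_channels(outs[1], 384)  # stack#2 output padded for stack#3
bypass = Bypass(384)
y = bypass(x, outs[2])                  # mix stack#3 input and output
```

In `full`, channels 0-255 come from stack #6, 256-383 from stack #5 and 384-511 from stack #4, which is the "most recent output first" rule described above.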

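Shape trace from one forward pass (a batch of 9 utterances with 80-dim features). Each downsampled stack scales the sequence down internally and back up at its output, so the time length stays at 3023 (50 Hz) until the final downsampling:

audio input: torch.Size([9, 6023, 80])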

audio input + padding: torch.Size([9, 6053, 80])

conv_embed: torch.Size([9, 3023, 192])

permute: torch.Size([3023, 9, 192])

output of stack#1: torch.Size([3023, 9, 192])

convert num channel: torch.Size([3023, 9, 256])

output of stack#2: torch.Size([3023, 9, 256])

bypass: torch.Size([3023, 9, 256])

convert num channel: torch.Size([3023, 9, 384])

output of stack#3: torch.Size([3023, 9, 384])

bypass: torch.Size([3023, 9, 384])

convert num channel: torch.Size([3023, 9, 512])

output of stack#4: torch.Size([3023, 9, 512])

bypass: torch.Size([3023, 9, 512])

convert num channel: torch.Size([3023, 9, 384])

output of stack#5: torch.Size([3023, 9, 384])

bypass: torch.Size([3023, 9, 384])

convert num channel: torch.Size([3023, 9, 256])

output of stack#6: torch.Size([3023, 9, 256])

bypass: torch.Size([3023, 9, 256])

get_full_dim_output : torch.Size([3023, 9, 512])

Last down sampling : torch.Size([1512, 9, 512])
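The sequence lengths and frame rates in the trace can be reproduced with simple arithmetic. A plain-Python sketch; the per-stack downsampling factors (1, 2, 4, 8, 4, 2 relative to 50 Hz) are inferred from the frame rates listed in 3.1, and rounding up in the final downsampling is an assumption that happens to match the 3023 → 1512 step in the trace.

```python
def conv_embed_out_len(T: int) -> int:
    # Conv-Embed: (T - 3) // 2 - 2, which equals (T - 7) // 2 for integers
    return (T - 3) // 2 - 2


def stack_frame_rates(base_hz: float = 50.0, factors=(1, 2, 4, 8, 4, 2)) -> list:
    # each stack runs at base_hz / factor internally
    return [base_hz / f for f in factors]


def final_downsample_len(T: int, factor: int = 2) -> int:
    # assumption: ceil division, consistent with 3023 -> 1512 in the trace
    return (T + factor - 1) // factor


T_in = 6053                        # padded input length from the trace
T_50hz = conv_embed_out_len(T_in)  # length fed to the six stacks
T_out = final_downsample_len(T_50hz)
print(T_50hz, T_out, stack_frame_rates())
```

With floor division the final length would be 1511 instead, so the trace suggests the last downsampling rounds up.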

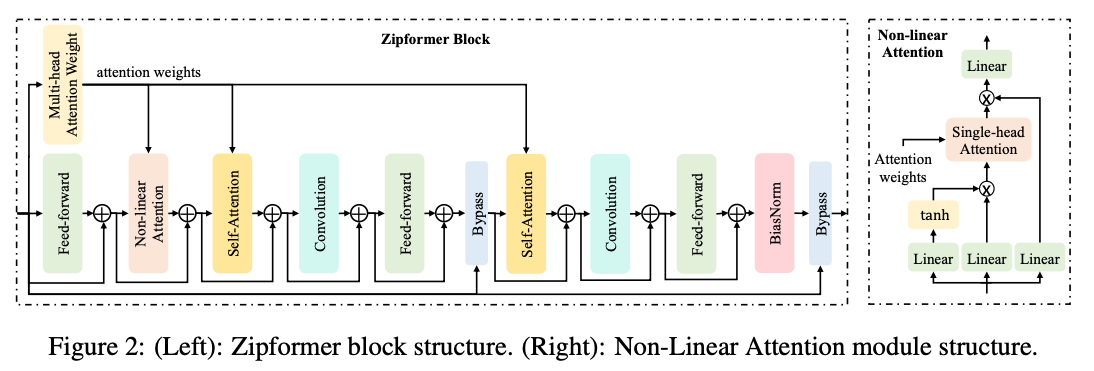

3.2 Zipformer Block

A Zipformer block has roughly twice the depth of a Conformer block. To save time and memory, it reuses the attention weights within the block.

MHSA is decomposed into two separate modules:

- Multi-Head Attention Weight (MHAW): calculates the attention weights.

- Self-Attention (SA): aggregates different frames using these attention weights.

Non-Linear Attention (NLA): makes full use of the computed attention weights to capture global information.

NLA with single-head attention weights (excerpt):

`x = x.reshape(seq_len, batch_size, num_heads, -1).permute(2, 1, 0, 3)`

`# now x: (num_heads, batch_size, seq_len, head_dim)`

`x = torch.matmul(attn_weights, x)`
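To illustrate the decomposition, here is a simplified PyTorch sketch in which the attention weights are computed once (MHAW) and then reused by NLA and by two self-attention (SA) modules. It is a simplification under stated assumptions: plain scaled dot-product scores without positional encoding, no Balancer/Whiten modules, no feed-forward or convolution modules in between, and NLA using the weights of a single head. All class names are hypothetical.

```python
import torch
import torch.nn as nn


class MultiHeadAttentionWeights(nn.Module):
    """MHAW: returns (num_heads, batch, seq_len, seq_len) attention weights."""

    def __init__(self, embed_dim: int, num_heads: int, query_head_dim: int):
        super().__init__()
        self.num_heads, self.d = num_heads, query_head_dim
        self.in_proj = nn.Linear(embed_dim, 2 * num_heads * query_head_dim)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        seq_len, batch, _ = x.shape
        q, k = self.in_proj(x).chunk(2, dim=-1)
        # (seq, batch, heads*d) -> (heads, batch, seq, d)
        q = q.reshape(seq_len, batch, self.num_heads, self.d).permute(2, 1, 0, 3)
        k = k.reshape(seq_len, batch, self.num_heads, self.d).permute(2, 1, 0, 3)
        scores = torch.matmul(q, k.transpose(-2, -1)) / self.d ** 0.5
        return scores.softmax(dim=-1)


class SelfAttention(nn.Module):
    """SA: aggregates frames with precomputed attention weights."""

    def __init__(self, embed_dim: int, num_heads: int, value_head_dim: int):
        super().__init__()
        self.in_proj = nn.Linear(embed_dim, num_heads * value_head_dim)
        self.out_proj = nn.Linear(num_heads * value_head_dim, embed_dim)

    def forward(self, x: torch.Tensor, attn_weights: torch.Tensor) -> torch.Tensor:
        seq_len, batch, _ = x.shape
        num_heads = attn_weights.shape[0]
        v = self.in_proj(x).reshape(seq_len, batch, num_heads, -1).permute(2, 1, 0, 3)
        v = torch.matmul(attn_weights, v)  # (heads, batch, seq, head_dim)
        return self.out_proj(v.permute(2, 1, 0, 3).reshape(seq_len, batch, -1))


class NonlinAttention(nn.Module):
    """NLA: tanh-gated values aggregated by attention, then gated again."""

    def __init__(self, embed_dim: int, hidden_channels: int):
        super().__init__()
        self.in_proj = nn.Linear(embed_dim, 3 * hidden_channels)
        self.out_proj = nn.Linear(hidden_channels, embed_dim)

    def forward(self, x: torch.Tensor, attn_weights: torch.Tensor) -> torch.Tensor:
        seq_len, batch, _ = x.shape
        s, v, y = self.in_proj(x).chunk(3, dim=-1)
        v = torch.tanh(s) * v
        num_heads = attn_weights.shape[0]
        v = v.reshape(seq_len, batch, num_heads, -1).permute(2, 1, 0, 3)
        v = torch.matmul(attn_weights, v)  # same step as the excerpt above
        v = v.permute(2, 1, 0, 3).reshape(seq_len, batch, -1)
        return self.out_proj(v * y)


# usage: compute the weights once and reuse them three times
torch.manual_seed(0)
x = torch.randn(10, 2, 64)  # (seq_len, batch, embed_dim)
mhaw = MultiHeadAttentionWeights(64, num_heads=4, query_head_dim=16)
attn = mhaw(x)              # (4, 2, 10, 10)
nla = NonlinAttention(64, hidden_channels=96)
sa1, sa2 = SelfAttention(64, 4, 12), SelfAttention(64, 4, 12)
x = x + nla(x, attn[0:1])   # NLA uses one head's weights
x = x + sa1(x, attn)
x = x + sa2(x, attn)        # second SA reuses the same weights
```

The saving comes from running the query/key projections and the softmax only once per block, while the value paths of NLA and both SA modules stay separate.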

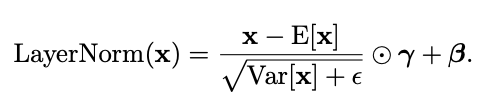

3.3 BiasNorm

The Conformer, which uses LayerNorm, suffers from two problems:

- Channel dominance: the model tries to defeat LayerNorm

- Observation: the Conformer model "sets one channel to a large constant value", e.g. 50. This behavior is likely an adaptive response to the strict normalization of LayerNorm.

- By setting one channel to a large, constant value, the model can retain some of the input's original scale despite LayerNorm's attempt to remove it: when that channel dominates the variance, the normalization divisor is roughly constant, so the remaining channels keep their relative length.

- LayerNorm removes the vector's length by enforcing zero mean and unit variance, effectively "erasing" the scale of the input values.

- The γ parameter rescales each feature independently, but it is a learned constant, the same for every input. It reintroduces only a fixed relative scaling across features, not the input-dependent length of the vector.

- Dead Modules (Inactive Layers)

- observation : Some parts of the model, particularly feed-forward or convolutional layers, output extremely small values, close to zero, effectively becoming "dead" or inactive because they contribute almost nothing to the model’s final output.

- How?

- 𝛾: Controls the scale (or magnitude) of the normalized output.

- β: Controls the shift (or bias) of the normalized output.

- If γ becomes close to zero, the output of the layer is scaled down significantly.

- Why?

- During early training, γ can oscillate around zero, so this part of the network gets stuck in a bad local optimum and the module stays inactive.

- The inconsistent sign of γ constantly reverses the direction of the gradients back-propagating to the modules.

BiasNorm replaces LayerNorm to address both problems:

$\mathrm{BiasNorm}(x) = \dfrac{x}{\mathrm{RMS}[x - b]} \cdot \exp(\gamma)$

This preserves some relative scaling and does not destroy magnitude information, allowing the network to carry this information forward without running into dead or inactive modules.

- b: a learnable, channel-wise bias. The RMS term $\mathrm{RMS}[x - b]$, taken over channels, serves as the scaling factor, but unlike LayerNorm, BiasNorm does not subtract the mean or enforce unit variance. The bias plays the role of the large constant channel, so some length information survives normalization.

- exp(γ): always positive, which avoids the gradient oscillation problem described above.
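A minimal PyTorch sketch of BiasNorm, assuming the form BiasNorm(x) = x / RMS[x − b] · exp(γ) with the channel-wise bias b and scalar γ discussed above; γ is stored as a log-scale so the effective scale exp(γ) is always positive. The class name and the zero initialization are assumptions. The usage compares it with LayerNorm on an input x and its doubled version 2x.

```python
import torch
import torch.nn as nn


class BiasNorm(nn.Module):
    """BiasNorm(x) = x / RMS[x - b] * exp(gamma), RMS taken over channels."""

    def __init__(self, num_channels: int):
        super().__init__()
        self.bias = nn.Parameter(torch.zeros(num_channels))  # channel-wise b
        self.log_scale = nn.Parameter(torch.zeros(()))       # scalar gamma

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        rms = (x - self.bias).pow(2).mean(dim=-1, keepdim=True).sqrt()
        # no mean subtraction on x itself, and exp(gamma) > 0 always
        return x / rms * self.log_scale.exp()


x = torch.tensor([[1.0, 2.0, 3.0, 4.0]])
layer_norm = nn.LayerNorm(4, elementwise_affine=False)
bias_norm = BiasNorm(4)
with torch.no_grad():
    bias_norm.bias.copy_(torch.tensor([0.0, 0.0, 0.0, 10.0]))  # illustrative b

ln_same = torch.allclose(layer_norm(x), layer_norm(2 * x), atol=1e-4)
bn_same = torch.allclose(bias_norm(x), bias_norm(2 * x), atol=1e-4)
print(ln_same, bn_same)  # prints: True False
```

LayerNorm maps x and 2x to (almost) the same output, so the length is lost; with a non-zero bias, BiasNorm's outputs differ, i.e. it keeps some length information. With b = 0 it would be scale-invariant as well, which is why the bias matters.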

3.4 SwooshR and SwooshL Activation functions

Swish tends to produce very small gradients (close to zero) for negative inputs.

SwooshR and SwooshL have non-vanishing slopes for negative inputs.

Because the slope stays non-zero even for negative inputs, the gradients do not vanish, which keeps the denominator term in Adam-type updates from getting dangerously small.

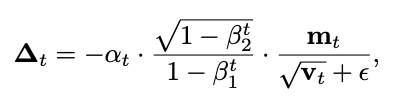
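The two activations can be written with softplus. This PyTorch sketch uses the formulas as we read them from the Zipformer paper and the icefall code, SwooshR(x) = log(1 + exp(x − 1)) − 0.08x − 0.313261687 and SwooshL(x) = log(1 + exp(x − 4)) − 0.08x − 0.035; treat the constants as something to double-check against the paper. It compares the slope at a strongly negative input with Swish (SiLU).

```python
import torch
import torch.nn.functional as F


def swoosh_r(x: torch.Tensor) -> torch.Tensor:
    # softplus(x - 1) = log(1 + exp(x - 1)); the constant makes swoosh_r(0) ~ 0
    return F.softplus(x - 1.0) - 0.08 * x - 0.313261687


def swoosh_l(x: torch.Tensor) -> torch.Tensor:
    # roughly a right-shifted SwooshR
    return F.softplus(x - 4.0) - 0.08 * x - 0.035


def grad_at(fn, value: float) -> float:
    x = torch.tensor(value, requires_grad=True)
    fn(x).backward()
    return x.grad.item()


for name, fn in [("swish", F.silu), ("swoosh_r", swoosh_r), ("swoosh_l", swoosh_l)]:
    print(name, grad_at(fn, -10.0))
```

The derivative of both Swoosh functions is sigmoid(x − shift) − 0.08, which tends to −0.08 for very negative x, while Swish's slope tends to 0.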

3.5 ScaledAdam Optimizer

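In Adam, the parameter update at step t is $\Delta_t = -\alpha_t \cdot \frac{\sqrt{1-\beta_2^t}}{1-\beta_1^t} \cdot \frac{m_t}{\sqrt{v_t}+\epsilon}$, where $\alpha_t$ is the learning rate and $m_t$, $v_t$ are the running first- and second-moment estimates of the gradient.

This update does not account for the scale of each parameter.

Let $r_{t-1}$ be the parameter scale (the root-mean-square value of the parameter tensor). Considering the relative change $\Delta_t / r_{t-1}$, Adam may learn too slowly, in relative terms, for parameters with large scales, and too fast for parameters with small scales.

ScaledAdam therefore scales the update by the parameter scale: $\Delta'_t = r_{t-1} \cdot \Delta_t$.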

ScaledAdam scales each parameter's update in proportion to that parameter's scale, and also explicitly learns the parameter scale.

This gives more consistent relative learning speeds for both large and small parameters.
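A simplified PyTorch sketch of the core ScaledAdam idea: an ordinary Adam step whose update is multiplied by the current parameter RMS r_{t−1}. It deliberately omits the explicit learning of the parameter scale and everything else in the real optimizer; the function name, the state layout and the `min_rms` floor are assumptions. The usage applies one step to a large-scale and a small-scale tensor with the same gradient.

```python
import torch


def scaled_adam_step(p, grad, state, lr=0.01, beta1=0.9, beta2=0.98,
                     eps=1e-8, min_rms=1e-5):
    """One simplified ScaledAdam step on tensor p (updated in place)."""
    if not state:
        state["step"] = 0
        state["exp_avg"] = torch.zeros_like(p)
        state["exp_avg_sq"] = torch.zeros_like(p)
    state["step"] += 1
    t = state["step"]
    m, v = state["exp_avg"], state["exp_avg_sq"]
    m.mul_(beta1).add_(grad, alpha=1 - beta1)
    v.mul_(beta2).addcmul_(grad, grad, value=1 - beta2)
    # plain Adam update Delta_t, with bias correction
    bias_corr = (1 - beta2 ** t) ** 0.5 / (1 - beta1 ** t)
    delta = -lr * bias_corr * m / (v.sqrt() + eps)
    # ScaledAdam: multiply by the parameter scale r_{t-1} = RMS(p)
    r = p.pow(2).mean().sqrt().clamp(min=min_rms)
    p.add_(r * delta)


grad = torch.ones(4)
big, small = torch.full((4,), 10.0), torch.full((4,), 0.01)
rel_change = []
for p in (big, small):
    before = p.clone()
    scaled_adam_step(p, grad, {})
    rel_change.append(((p - before).norm() / before.norm()).item())
print(rel_change)  # both about 0.01, i.e. about lr
```

Both tensors change by about 1% of their size. Without the `r` factor, the same first step would move every element by about 0.01 in absolute terms: only 0.1% of the large tensor but 100% of the small one.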

*Eden Learning Rate Scheduler

Eden depends on both the step index t and the epoch index e so that the amount of parameter change after a certain amount of training data (e.g., one hour) stays approximately constant when the batch size changes. As a result, the schedule parameters should not need to be re-tuned when the batch size changes.
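The Eden formula itself is not written out in these notes. As we understand it from the Zipformer paper and icefall, the learning rate is the base rate times ((t² + α_step²)/α_step²)^(−1/4) · ((e² + α_epoch²)/α_epoch²)^(−1/4), with a short linear warm-up. The sketch below assumes that form; the default values of `lr_batches`, `lr_epochs`, `warmup_batches` and `warmup_start`, and the base rate 0.045, are illustrative assumptions, not tuned settings.

```python
def eden_lr(base_lr: float, step: int, epoch: float,
            lr_batches: float = 5000.0, lr_epochs: float = 6.0,
            warmup_batches: int = 500, warmup_start: float = 0.5) -> float:
    """Eden-style learning rate as a function of step index t and epoch index e."""
    step_factor = ((step ** 2 + lr_batches ** 2) / lr_batches ** 2) ** -0.25
    epoch_factor = ((epoch ** 2 + lr_epochs ** 2) / lr_epochs ** 2) ** -0.25
    if step >= warmup_batches:
        warmup = 1.0
    else:
        # linear warm-up from warmup_start to 1.0
        warmup = warmup_start + (1.0 - warmup_start) * step / warmup_batches
    return base_lr * step_factor * epoch_factor * warmup


for step, epoch in [(0, 0), (5000, 0), (5000, 6), (50000, 20)]:
    print(step, epoch, round(eden_lr(0.045, step, epoch), 5))
```

Because the epoch term counts passes over the data rather than optimizer steps, part of the decay is tied to how much data has been seen, which is what makes the schedule less sensitive to the batch size.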

4. Experiments

4.1 Zipformer Training Details

Speed perturbation

SpecAugment

mixed precision training

activation constraints (Whitener, Balancer)

Pruned transducer loss, a memory-efficient version of the transducer loss

Beam search with a beam size of 4

No language model for rescoring

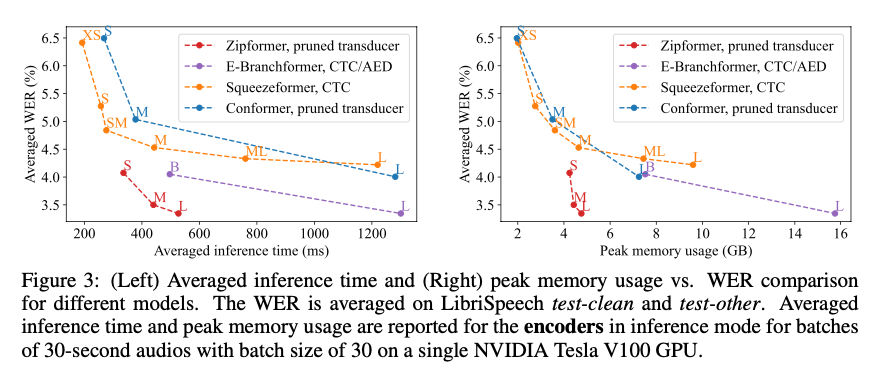

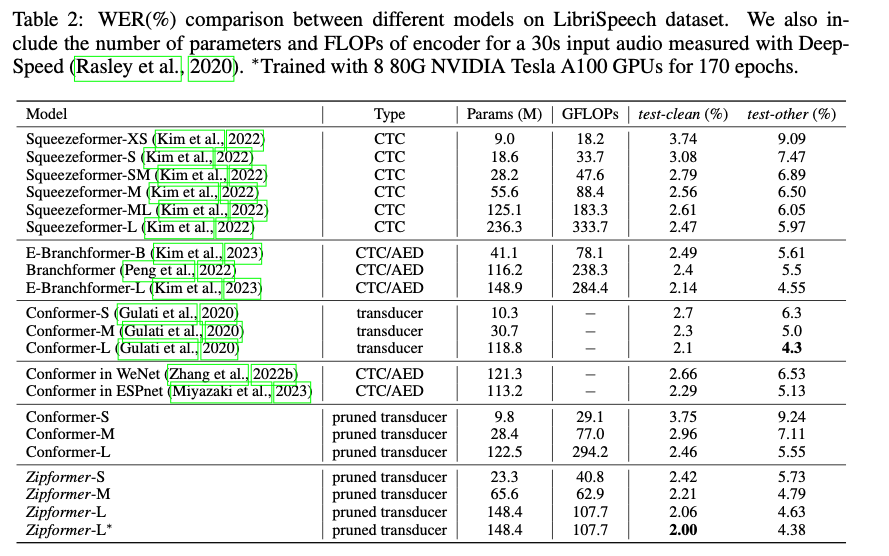

4.2 Comparison

Overall, Zipformer models achieve a better trade-off between performance and efficiency than the other models.

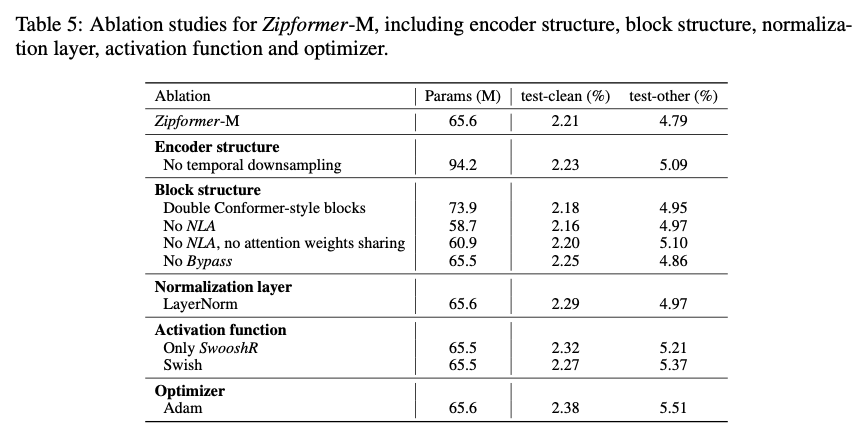

4.3 Ablation Studies

- Encoder structure

- The temporal downsampling structure is replaced with a Conv-Embed of downsampling rate 4 (as in Conformer).

- The resulting model has 12 Zipformer blocks with a constant embedding dimension of 512, and has more parameters than the base model.

- The results show that the temporal downsampling structure, introduced for efficiency, does not cause information loss; instead, it improves modeling capacity with fewer parameters.

- Block structure.

- Since each Zipformer block has roughly twice as many modules as a Conformer block, we replace each Zipformer block in the base model with two Conformer blocks stacked together.

- If we further remove the attention-weight sharing mechanism after removing NLA, the model has slightly more parameters and slower inference, but the WERs do not improve.

- We hypothesize that the two attention weights inside one Zipformer block are quite consistent and sharing them does not harm the model.