1. Why do we need activation functions?

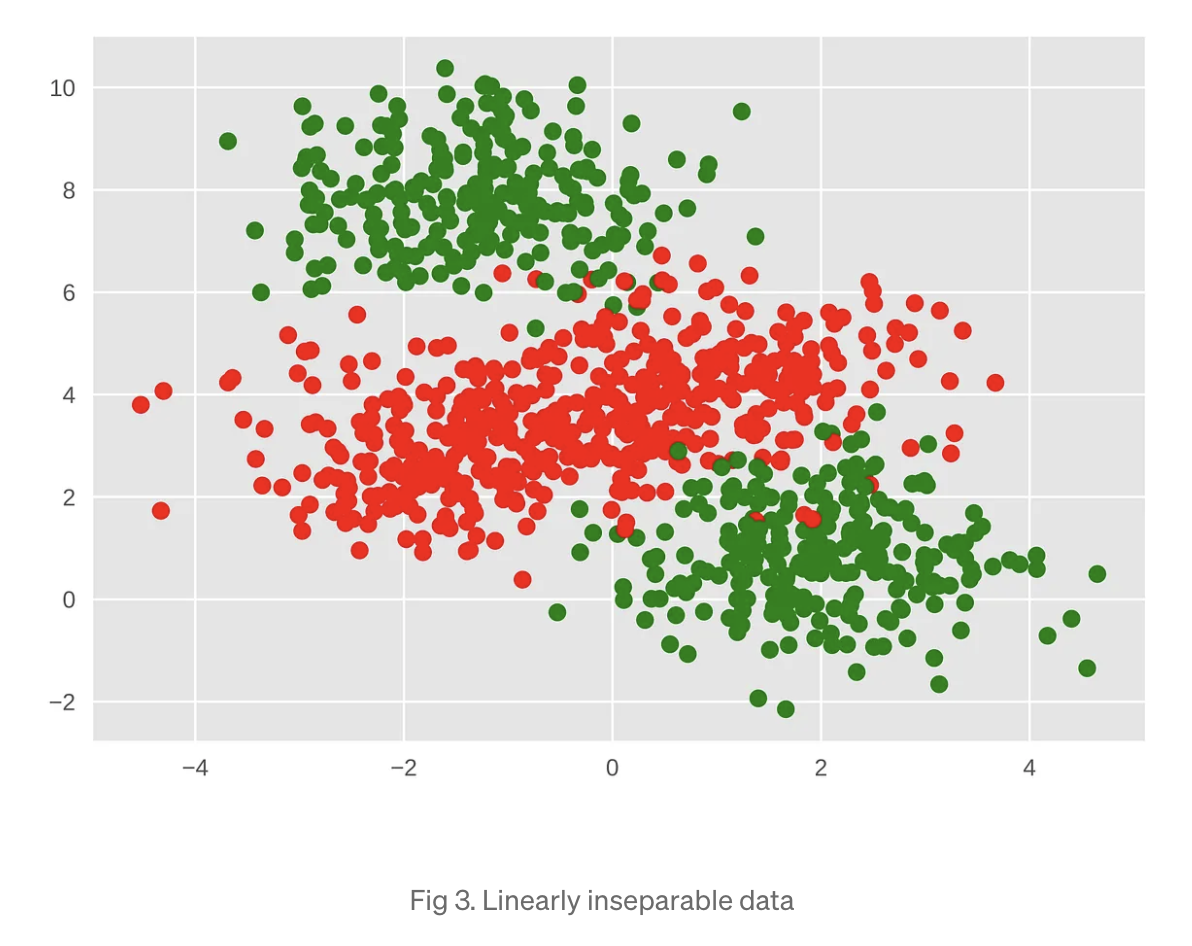

A neural network learns the function f that best describes the relationship between input x and output y.

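-> Unfortunately, in most cases this relationship is non-linear, and a stack of purely linear layers can only represent a linear function. Activation functions supply the missing non-linearity.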

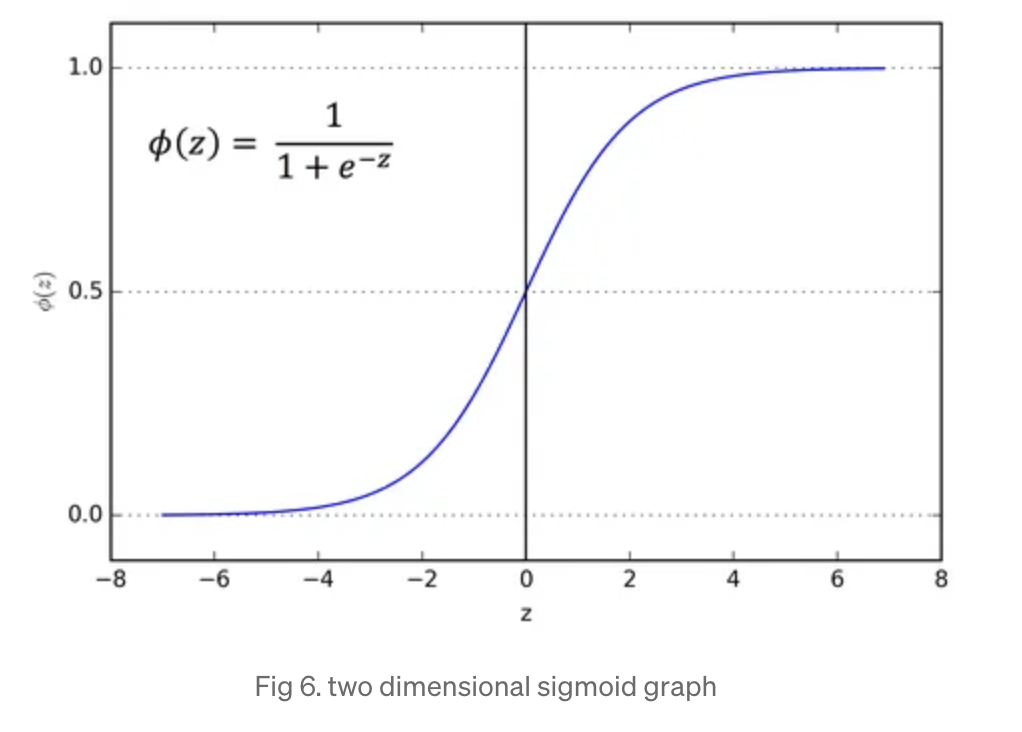
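One way to see why a non-linearity is needed: without an activation function, two stacked linear layers reduce to a single linear layer. The sketch below checks this numerically in plain Python. The weights `W1`, `b1`, `W2`, `b2` and the helper names are arbitrary illustrative choices, not values from the post.

```python
# Two "layers" with no activation function: y = W2 (W1 x + b1) + b2.
# All weights below are arbitrary illustrative values (assumptions).

def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def matmul(A, B):
    """Multiply matrix A by matrix B (both lists of rows)."""
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]

def add(u, v):
    """Element-wise sum of two vectors."""
    return [a + b for a, b in zip(u, v)]

W1, b1 = [[1.0, 2.0], [0.5, -1.0]], [0.5, -0.5]
W2, b2 = [[2.0, -1.0]], [1.0]

def two_linear_layers(x):
    hidden = add(matvec(W1, x), b1)     # first layer, no activation
    return add(matvec(W2, hidden), b2)  # second layer, no activation

# The same mapping written as ONE linear layer: W = W2 W1, b = W2 b1 + b2.
W = matmul(W2, W1)
b = add(matvec(W2, b1), b2)

def one_linear_layer(x):
    return add(matvec(W, x), b)

x = [3.0, -2.0]
print(two_linear_layers(x), one_linear_layer(x))  # both outputs agree
```

However many linear layers are stacked this way, the result stays linear in x, so the network cannot fit a non-linear relationship.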

For example, a single sigmoid function looks like this.

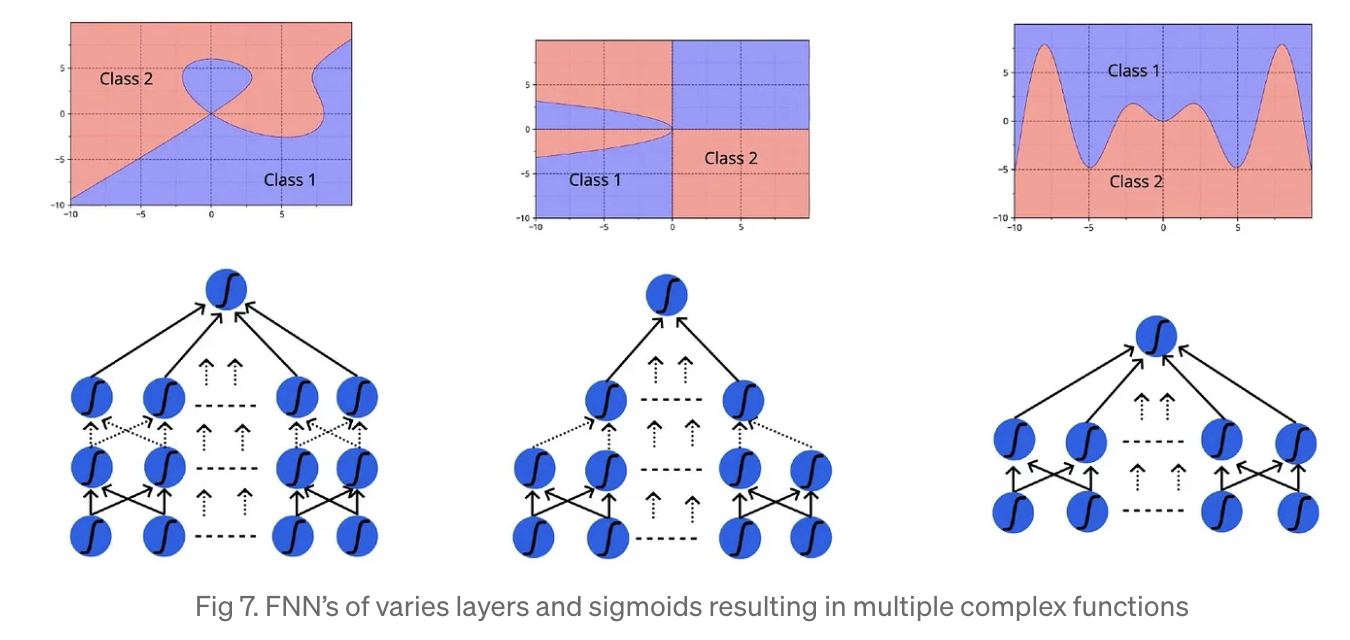
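For reference, the curve in the figure is presumably the standard logistic sigmoid, 1 / (1 + e^(-x)). A minimal sketch that prints a few values shows the S shape: close to 0 for large negative inputs, exactly 0.5 at 0, and close to 1 for large positive inputs. The sample points are arbitrary.

```python
import math

def sigmoid(x):
    """Logistic sigmoid: 1 / (1 + e^(-x))."""
    return 1.0 / (1.0 + math.exp(-x))

# Sample points chosen only for illustration.
for x in (-6, -2, 0, 2, 6):
    print(f"sigmoid({x:>2}) = {sigmoid(x):.4f}")
```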

Now, by combining many such sigmoid curves across the layers of a feedforward neural network (FNN), you can approximate almost any complex relationship between input and output.

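- In theory, even a 2-layer network can approximate any continuous function, given enough neurons.

- However, stacking more layers greatly reduces the number of neurons needed for the approximation, which makes learning more efficient.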
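The idea of joining sigmoid curves can be sketched with a hand-built network that has one hidden layer of sigmoid units and a linear output. This is only an illustration under stated assumptions: the weights are picked by hand rather than learned, the target `math.sin` on [0, π] is an arbitrary example, and `build_network`, `max_error`, and the `steepness` value are hypothetical names and settings introduced here.

```python
import math

def sigmoid(z):
    """Numerically stable logistic sigmoid."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def build_network(f, lo, hi, n_hidden, steepness=200.0):
    """Hand-pick weights for a one-hidden-layer sigmoid network (no training).

    Each hidden unit is a steep sigmoid centered in one cell of [lo, hi];
    its output weight is the change of f across that cell, so the sum of
    all units forms a smooth staircase that follows f.
    """
    step = (hi - lo) / n_hidden
    centers = [lo + (i + 0.5) * step for i in range(n_hidden)]
    jumps = [f(lo + (i + 1) * step) - f(lo + i * step) for i in range(n_hidden)]
    bias = f(lo)

    def net(x):
        return bias + sum(j * sigmoid(steepness * (x - c))
                          for j, c in zip(jumps, centers))
    return net

def max_error(f, net, lo, hi, samples=1000):
    """Largest absolute gap between f and net on an evenly spaced grid."""
    xs = [lo + (hi - lo) * i / samples for i in range(samples + 1)]
    return max(abs(f(x) - net(x)) for x in xs)

# More hidden sigmoid units give a closer fit to the target function.
for n in (5, 50):
    net = build_network(math.sin, 0.0, math.pi, n)
    print(n, "hidden units, max error:", max_error(math.sin, net, 0.0, math.pi))
```

The sketch only covers the first bullet (a shallow network can get close if it has enough neurons). It does not demonstrate the second bullet, since showing that depth reduces the neuron count would require comparing trained deep and shallow networks.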
