In deep learning, the most important thing is data. Since we are working with speech, we first need to know how to extract features from audio.

Extracting features lets us represent audio as vectors, which is the form a model can learn from.

1. MFCC (Mel-Frequency Cepstral Coefficients)

Simply put, MFCC transforms audio data into feature vectors, one vector of coefficients per short frame of audio.

Using the librosa Python library, we can extract these feature vectors from an audio file in a few lines:

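```python
import librosa

def get_librosa_mfcc(path, n_mfcc: int = 40):
    SAMPLE_RATE = 16000  # resample every file to 16 kHz
    HOP_LENGTH = 128     # samples between successive frames (8 ms at 16 kHz)
    N_FFT = 512          # FFT size / frame length (32 ms at 16 kHz)

    # Load the audio as a mono float array, resampled to SAMPLE_RATE
    signal, sr = librosa.load(path, sr=SAMPLE_RATE)

    # Returns an array of shape (n_mfcc, n_frames)
    return librosa.feature.mfcc(
        y=signal, sr=sr, n_mfcc=n_mfcc, hop_length=HOP_LENGTH, n_fft=N_FFT
    )
```

2. Mel-scale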

Humans distinguish differences between low frequencies much more finely than differences between high frequencies. So when extracting features from audio, it is more effective to keep fine detail at low frequencies and coarser detail at high frequencies. The perceptual frequency scale built around this behavior is called the mel scale.
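As a minimal sketch, the conversion between Hz and mels can be written with the widely used HTK-style formula m = 2595 · log10(1 + f / 700). This is one of several mel formulas in use (librosa, for example, defaults to the Slaney variant), so treat the constants as an assumption. The names `hz_to_mel` and `mel_to_hz` are illustrative.

```python
import numpy as np

def hz_to_mel(f_hz):
    """Convert frequency in Hz to mels (HTK-style formula, an assumption here)."""
    return 2595.0 * np.log10(1.0 + np.asarray(f_hz, dtype=float) / 700.0)

def mel_to_hz(mel):
    """Inverse of hz_to_mel: convert mels back to Hz."""
    return 700.0 * (10.0 ** (np.asarray(mel, dtype=float) / 2595.0) - 1.0)

# The same 1000 Hz step spans far fewer mels at high frequencies,
# which is how the scale gives low frequencies more resolution.
low_step = hz_to_mel(1000.0) - hz_to_mel(0.0)
high_step = hz_to_mel(8000.0) - hz_to_mel(7000.0)
print(f"0-1000 Hz:    {low_step:.1f} mel")
print(f"7000-8000 Hz: {high_step:.1f} mel")
```

With this formula, 1000 Hz maps to roughly 1000 mels, the reference point the mel scale was defined around.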

3. Short-Time Fourier Transform

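The duration of "Hello" varies from speaker to speaker. So if we extract mel-scale features from the whole audio clip at once, it is hard for a model to learn the relationship between the word "hello" and its feature vectors.

Based on a large body of research, speech is treated as roughly stationary over 20~40 ms: within such a short window, the phoneme being spoken does not change.

So we divide the audio into 20~40 ms frames and extract features from each frame. Applying the Fourier transform frame by frame in this way is called the Short-Time Fourier Transform (STFT).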
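To make the frame sizes concrete, here is a small sketch that converts frame and hop durations from milliseconds to samples and counts how many full frames fit in a clip. The 25 ms frame and the half-frame (12.5 ms) hop are assumed for illustration, and `frame_params` / `num_frames` are hypothetical helper names.

```python
def frame_params(sample_rate, frame_ms=25.0, hop_ms=12.5):
    """Convert frame and hop durations (ms) into sample counts."""
    frame_len = int(round(sample_rate * frame_ms / 1000.0))
    hop_len = int(round(sample_rate * hop_ms / 1000.0))
    return frame_len, hop_len

def num_frames(n_samples, frame_len, hop_len):
    """Number of full frames that fit in n_samples (no padding)."""
    if n_samples < frame_len:
        return 0
    return 1 + (n_samples - frame_len) // hop_len

# One second of 16 kHz audio split into 25 ms frames every 12.5 ms
frame_len, hop_len = frame_params(16000)
print(frame_len, hop_len, num_frames(16000, frame_len, hop_len))
```

Each frame then gets its own feature vector, so a longer "Hello" simply produces more frames instead of a differently shaped input.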

4. MFCC Revisited

This is the raw audio signal.

1. Pre-Emphasis

The high-frequency part of the human voice comes out with less energy than the low-frequency part, so we apply a high-pass filter (pre-emphasis) to the audio to amplify the high-frequency components.
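Pre-emphasis is commonly implemented as the first-order filter y[n] = x[n] - α·x[n-1]. The sketch below assumes α = 0.97, a common but not mandatory value; `pre_emphasis` is a hypothetical helper name.

```python
import numpy as np

def pre_emphasis(signal, alpha=0.97):
    """First-order high-pass filter: y[n] = x[n] - alpha * x[n-1].

    alpha = 0.97 is a common choice, assumed here for illustration.
    """
    x = np.asarray(signal, dtype=float)
    # Keep the first sample; each later sample subtracts a fraction of the
    # previous one, which suppresses slowly varying (low-frequency) content.
    return np.append(x[0], x[1:] - alpha * x[:-1])

# A constant (very low-frequency) signal is almost removed,
# while a rapidly alternating (high-frequency) one is amplified.
print(pre_emphasis([1.0, 1.0, 1.0]))
print(pre_emphasis([1.0, -1.0, 1.0]))
```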

2. Framing and Windowing

After that, we divide the audio into small (20~40 ms) frames.

Notice that each frame starts at the halfway point of the previous frame, so consecutive frames overlap and share part of the audio. This overlap keeps consecutive frames continuous, so the features do not change abruptly from one frame to the next.

After slicing the signal into frames, we apply a Hamming window to each frame. The window tapers each frame toward zero at its edges, which reduces spectral leakage in the Fourier transform that follows.
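A NumPy sketch of framing and windowing, assuming a 400-sample frame (25 ms at 16 kHz) and a hop of half a frame to match the 50% overlap described above. The helper name `frame_signal` and the zero-padding of the last partial frame are illustrative choices, not a fixed recipe.

```python
import numpy as np

def frame_signal(signal, frame_len=400, hop_len=None):
    """Split a 1-D signal into overlapping frames and apply a Hamming window.

    By default hop_len = frame_len // 2, so each frame starts halfway through
    the previous one. Returns an array of shape (n_frames, frame_len).
    """
    x = np.asarray(signal, dtype=float)
    if hop_len is None:
        hop_len = frame_len // 2
    # Enough frames to cover the whole signal; zero-pad the tail if needed
    n_frames = 1 + max(0, int(np.ceil((len(x) - frame_len) / hop_len)))
    pad_len = (n_frames - 1) * hop_len + frame_len - len(x)
    padded = np.append(x, np.zeros(max(0, pad_len)))
    # Row i holds the sample indices covered by frame i
    idx = np.arange(frame_len)[None, :] + hop_len * np.arange(n_frames)[:, None]
    frames = padded[idx]
    # Taper each frame's edges toward zero to reduce spectral leakage
    return frames * np.hamming(frame_len)

x = np.arange(1000, dtype=float)
frames = frame_signal(x)
print(frames.shape)
```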

3. Fourier Transform

Now we apply the Fourier transform (in practice, an FFT) to each frame to get the frequency distribution of that frame.

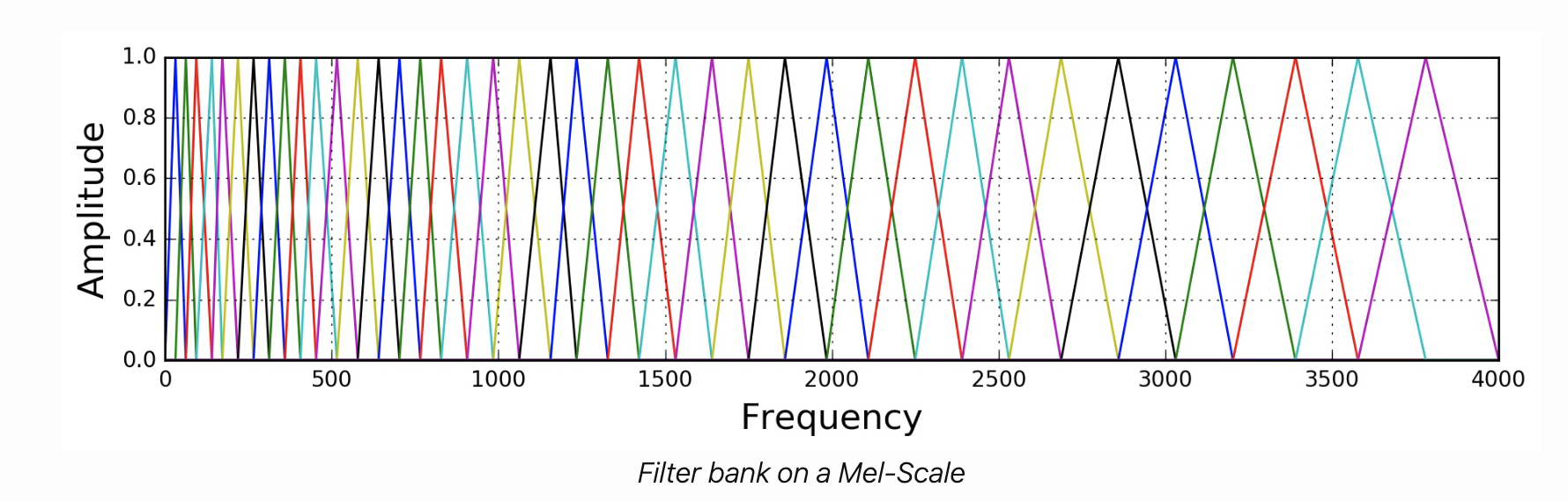
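In code, this step is typically an N-point FFT followed by squaring the magnitude, which gives the periodogram (power spectrum) used in the filter bank step. The sketch assumes NFFT = 512 and normalizes by NFFT; `power_spectrum` is a hypothetical helper name.

```python
import numpy as np

def power_spectrum(frames, n_fft=512):
    """Periodogram of each windowed frame.

    frames has shape (n_frames, frame_len); frames shorter than n_fft are
    zero-padded by the FFT. Returns shape (n_frames, n_fft // 2 + 1).
    """
    spectrum = np.fft.rfft(frames, n=n_fft, axis=-1)  # one-sided FFT per frame
    return (np.abs(spectrum) ** 2) / n_fft

# Sanity check: a 1000 Hz tone in one Hamming-windowed 25 ms frame at 16 kHz
sr, n_fft = 16000, 512
t = np.arange(400) / sr
frame = np.sin(2 * np.pi * 1000.0 * t) * np.hamming(400)
pspec = power_spectrum(frame[np.newaxis, :], n_fft=n_fft)
peak_bin = int(np.argmax(pspec[0]))
print(pspec.shape, peak_bin, peak_bin * sr / n_fft)  # bin index and its frequency in Hz
```

Each FFT bin here spans sr / NFFT = 31.25 Hz, so bin 32 corresponds to 1000 Hz.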

4. Mel Filter Bank

To mimic human auditory perception, we apply a mel filter bank to the result of the Fourier transform: a set of triangular filters spaced evenly on the mel scale. This keeps fine frequency resolution at low frequencies and merges high frequencies into coarser bands.

The triangular filters become wider as the frequency gets higher.

After applying the filter bank to the power spectrum (periodogram) of the signal, we obtain the following spectrogram:

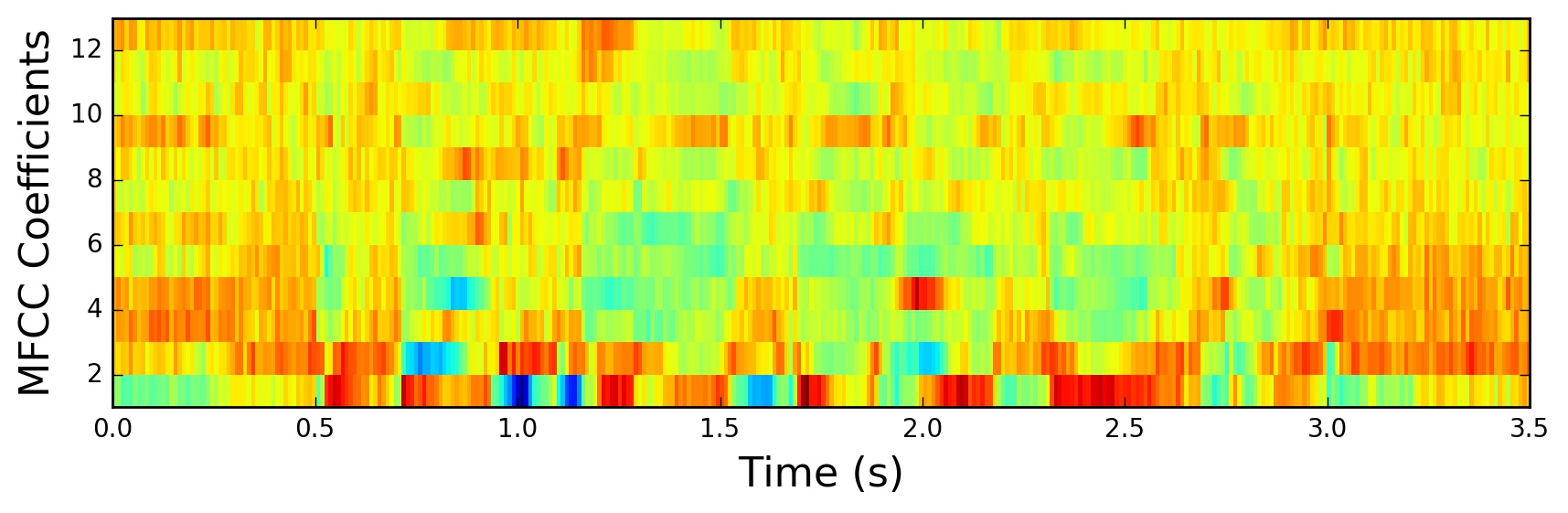
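A sketch of how such a filter bank can be built: place NFILT + 2 points evenly on the mel scale, map them back to FFT bin indices, and draw a triangle over each consecutive triple of points. It assumes the HTK-style mel formula, 40 filters, NFFT = 512 and a 16 kHz sample rate; these are illustrative values, and `mel_filter_bank` is a hypothetical name.

```python
import numpy as np

def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def mel_filter_bank(n_filters=40, n_fft=512, sample_rate=16000):
    """Triangular filters spaced evenly on the mel scale.

    Returns a matrix of shape (n_filters, n_fft // 2 + 1).
    """
    # n_filters + 2 equally spaced points on the mel scale, from 0 Hz to Nyquist
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sample_rate / 2), n_filters + 2)
    # Map the points back to Hz, then to FFT bin indices
    bins = np.floor((n_fft + 1) * mel_to_hz(mel_points) / sample_rate).astype(int)

    fbank = np.zeros((n_filters, n_fft // 2 + 1))
    for m in range(1, n_filters + 1):
        left, center, right = bins[m - 1], bins[m], bins[m + 1]
        for k in range(left, center):   # rising edge of the triangle
            fbank[m - 1, k] = (k - left) / (center - left)
        for k in range(center, right):  # falling edge of the triangle
            fbank[m - 1, k] = (right - k) / (right - center)
    return fbank

fbank = mel_filter_bank()
widths = (fbank > 0).sum(axis=1)  # number of nonzero bins per filter
print(fbank.shape, widths[:3], widths[-3:])
```

Given a power spectrum `pspec` of shape (n_frames, 257), `pspec @ fbank.T` gives filter bank energies of shape (n_frames, 40); taking their logarithm (with a small floor to avoid log(0)) gives the log mel filter banks.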

5. MFCCs

To decorrelate the filter bank coefficients, we apply the Discrete Cosine Transform (DCT) to the log filter bank energies and keep only the first few coefficients.

The result is the MFCCs.
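The DCT step can be sketched as a matrix product with an orthonormal DCT-II basis applied to log filter bank energies. Keeping 13 coefficients is a common choice assumed here, and `dct_matrix` / `mfcc_from_log_fbank` are hypothetical names. If SciPy is available, `scipy.fft.dct(log_fbank, type=2, axis=-1, norm="ortho")[:, :n_mfcc]` should give the same values.

```python
import numpy as np

def dct_matrix(n_in, n_out):
    """Orthonormal DCT-II basis of shape (n_out, n_in)."""
    n = np.arange(n_in)
    k = np.arange(n_out)[:, None]
    basis = np.cos(np.pi * k * (2 * n + 1) / (2 * n_in)) * np.sqrt(2.0 / n_in)
    basis[0] /= np.sqrt(2.0)  # rescale the k = 0 row so the basis is orthonormal
    return basis

def mfcc_from_log_fbank(log_fbank, n_mfcc=13):
    """DCT-II over the filter axis, keeping the first n_mfcc coefficients.

    log_fbank has shape (n_frames, n_filters); the result has shape (n_frames, n_mfcc).
    """
    basis = dct_matrix(log_fbank.shape[-1], n_mfcc)
    return log_fbank @ basis.T

# A flat log spectrum has no spectral shape, so only coefficient 0 is nonzero
flat = np.ones((2, 40))
print(np.round(mfcc_from_log_fbank(flat), 6))
```

Dropping the higher-order coefficients keeps the smooth spectral envelope and discards fine spectral detail.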

5. Filter Banks vs MFCCs

One might question whether MFCCs are still the right choice, given that deep neural networks are less susceptible to highly correlated input, so the Discrete Cosine Transform (DCT) is no longer a necessary step. It is also worth noting that the DCT is a linear transformation, and therefore undesirable, as it discards some information in speech signals, which are highly non-linear.

Use Mel-scaled filter banks if the machine learning algorithm is not susceptible to highly correlated input.

Use MFCCs if the machine learning algorithm is susceptible to correlated input.

Reference: "Speech Processing for Machine Learning: Filter banks, Mel-Frequency Cepstral Coefficients (MFCCs) and What’s In-Between" (haythamfayek.com), which explains how to compute filter banks and MFCCs and discusses why filter banks are becoming increasingly popular.
